Most of us can no longer imagine a day without our smartphones, which we use to surf the web, listen to music, or play games. It is no surprise, then, that alongside countless developers, numerous testers also work on mobile devices. The question is: do testing methods change with these new platforms?

In the beginning…

About two years ago, I had the opportunity to immerse myself in the world of mobile testing as part of a client project. A small team at Saxonia Systems (since 03/2020 ZEISS Digital Innovation) had started developing an iOS app. Even back then, one item on my agenda as a software tester read: “One day, you’ll do something with apps.” And since I had always had an affinity for Apple and its high quality standards (I own a variety of its products), I did not hesitate to accept.

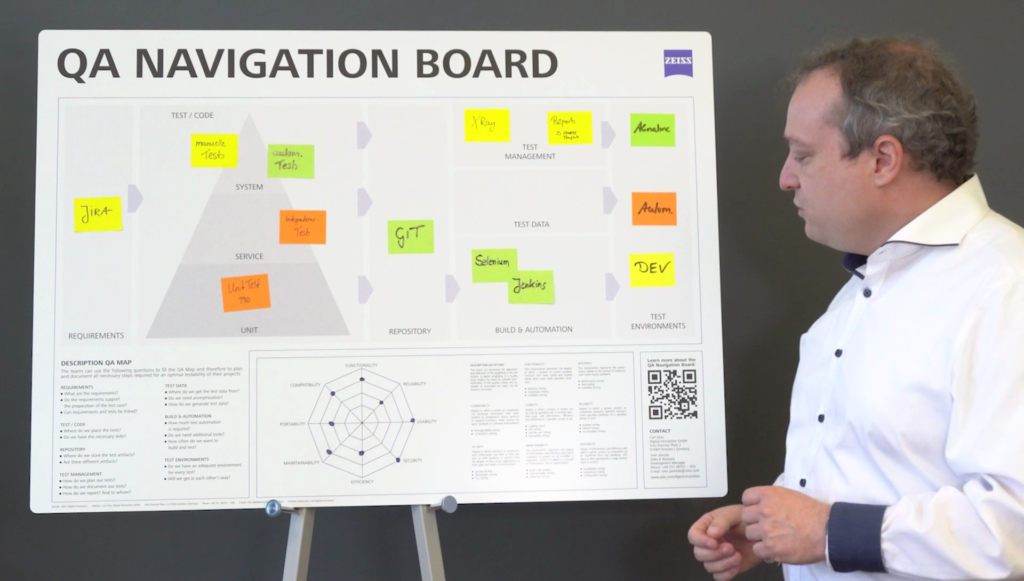

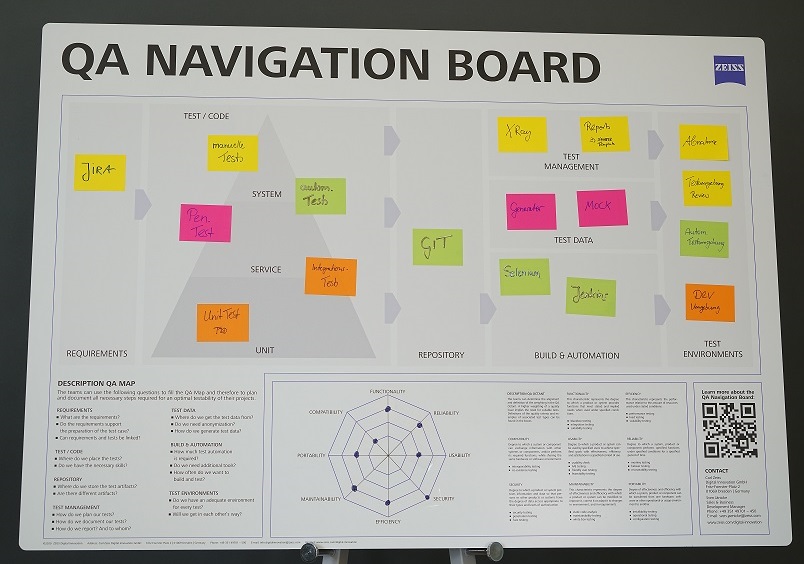

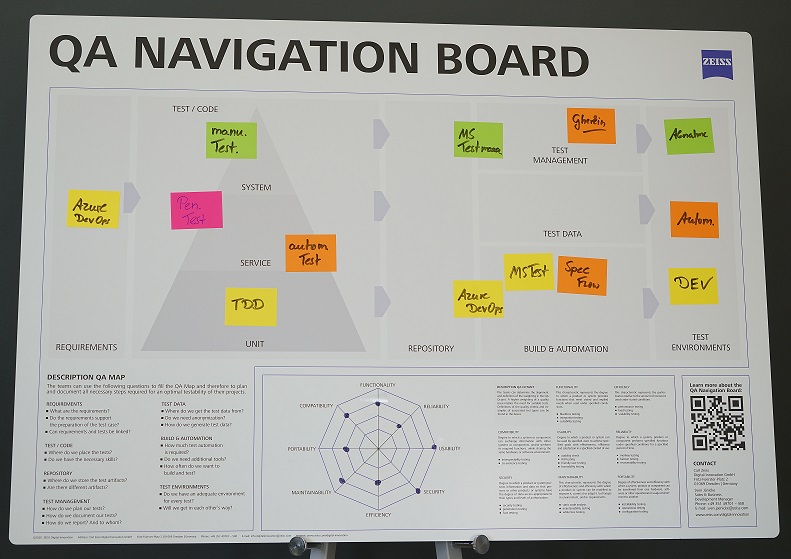

From then on, my job was to run the acceptance test of the development team’s sprint result on behalf of the client every two weeks. Onboarding was quick: within hours, I had the names of my contacts in the development team, an iPad on my desk, and access to JIRA.

And now: Start testing!

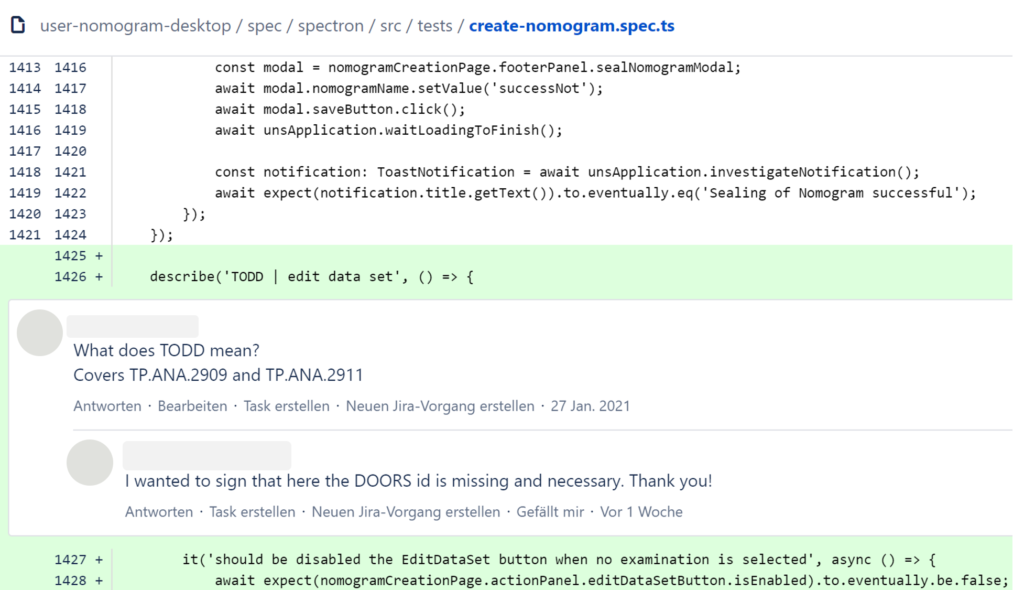

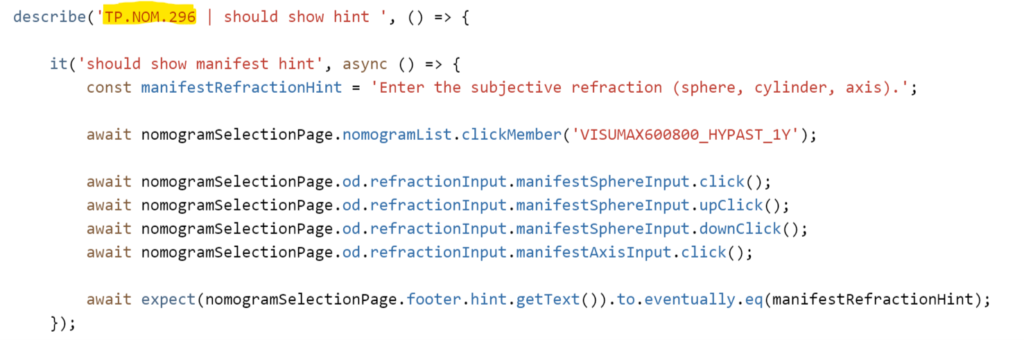

Specifying test cases was very similar to what I was used to. During the app’s development, the client introduced the JIRA plug-in Xray. Anyone who has worked with JIRA and has specified test cases in other tools will quickly get the hang of this plug-in. Since I had worked with several test management tools and with JIRA before, I got up to speed with Xray quickly, and soon enough I had specified my first test cases for the acceptance test.

According to the specifications, the acceptance environment always had to run the latest iOS version, which reduced the number of iOS release combinations and simplified testing. Until then, I had always had to check which operating system and which service pack were installed to make sure the software was supported. In the mobile sector, and with iOS in particular, users’ willingness to update quickly makes the acceptance test somewhat easier, because the test artifact has to work with the current iOS version at all times.

New challenges

But how would I get the sprint result onto my iPad? In my previous projects for this client, all of which involved desktop applications, I would find an installer for the software under test on a shared drive every morning, install it on my virtual machine, and start testing.

Now I was supposed to install an unpublished app on my iPad, but how? I contacted the Saxonia development team, and they told me what to do. All I had to do was give them the Apple ID used on my iPad and install the TestFlight app. TestFlight is an Apple online service that lets developers make their apps available to a select group of users for testing before the official App Store release.

The development team added my Apple ID to the pool of app testers. I accepted the invitation that arrived in my inbox, and promptly, the latest version of the app was available to me in TestFlight. One tap on “Install”, and the test artifact was downloaded and installed on my iPad. From then on, TestFlight automatically notified me by push email whenever a new version was available for testing. Gone were the days when I had to look for a new build on the shared drive every morning. One glance at my iPad, one tap, and I was ready to go. Receiving the test artifact this way was much more convenient than I was used to from other projects.

Here we go!

The day of the first acceptance test arrived, and I was very excited to finally get to work. I could not have been better prepared: the specified test cases were ready for execution, the iPad’s battery was fully charged, the latest app version was installed, and a soft drink was at hand. So let’s get going!

But what was that? I had barely executed the first test steps when the app deviated from my test case: an acceptance criterion was not met. So I created a bug directly from the test execution, clearly recorded all the steps to reproduce it, and wanted to add a screenshot for better visualization. And that was where I ran into a problem: how do you get a screenshot from the iPad into the bug report?

On the PC, that was simple: capture the screen, save the image, and attach it to the ticket. But how do you take a screenshot on the iPad? And how do you get it into JIRA? As an experienced iOS user, I quickly figured out how to take a screenshot, and soon it was saved on my iPad. But then I had to work out how to transfer it. I considered the following options:

- Send the screenshot to myself by email

- Upload the screenshot to an online storage space and download it to the PC

- Connect the iPad to the PC with the data cable

I chose the data cable, and from then on, I diligently transferred my screenshots to JIRA.

In mobile testing, documenting bugs with screenshots worked differently than it did for desktop or web applications, and back then, this made bug reporting more laborious. Today, I work with a MacBook and can easily transfer screenshots from mobile devices using Apple’s AirDrop.

I completed the acceptance test without any further deviations from the target state, and I was happy to see a lot of green test cases. The development team addressed the bug in the next sprint. The screenshot that had been so difficult to attach was much appreciated and helped fix the deviation, so the effort paid off.

Done!

After my first mobile acceptance test, my conclusion was clear. Thanks to my previous project experience and my training as a software tester, I quickly found my way around the world of mobile testing. New technologies always bring challenges, but that does not mean you have to reinvent the wheel: tried-and-tested processes and methods can be applied without difficulty. My affinity for mobile applications and devices certainly gave me an edge in exploring this new world, but I would encourage every tester to get involved in this exciting field.

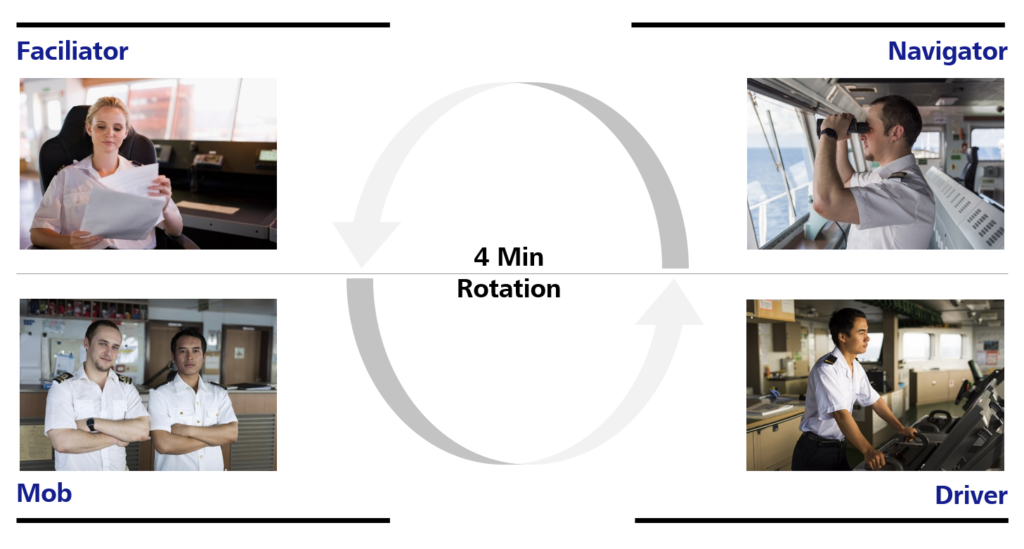

Today, I no longer work on the acceptance side; instead, I have become an established member of the development team. In addition to the acceptance tests of the stories, I am responsible for managing a variety of test devices and their various operating systems, for test data management, and for automated UI tests. I will tell you about the challenges in these areas in my next post.