Critical errors that only surface in live operation pose a major financial risk and bring negative publicity for both the product and the companies involved. This is why testing is a fundamental, integral part of modern software development. High test coverage and prompt feedback on test results make it possible to document and confirm the quality and maturity of the product.

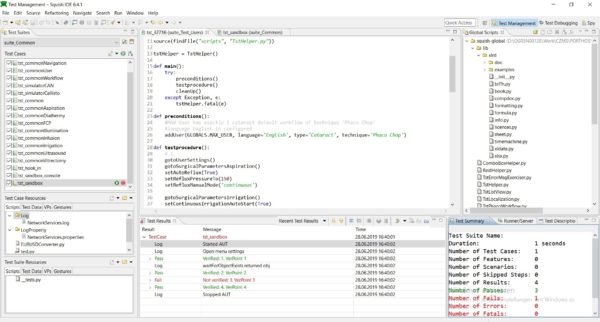

Test automation tools make it possible to run such tests quickly and meet the requirements of modern development projects. They collect information through the graphical user interface of the application under test, execute scripted interactions automatically and, based on the results, assess the application.

Test automation tools provide quick and continuous feedback on the quality of the software under test, but a few points need to be considered when using them. The tools available on the market differ in how they integrate into the development and testing process and in which technologies they support. How efficiently a test automation solution can be used depends primarily on the engine that controls the graphical interface, and this engine has to support the technology under test as well as possible. Projects built on “new” technologies such as Angular2 in particular face the problem that established, familiar tools do not always keep pace with the technology they are applied to.

Clintr project and testing with Protractor

We use Angular2 as the development framework for our current software development project, Clintr, and aimed for a high degree of test automation from the start. Clintr is a web application that alerts service providers to prospective customers in their contact network. To do so, it analyzes data from the XING API and, based on defined criteria, determines in a fully automated way which companies have a demand for services. Once such a demand has been identified, Clintr searches the service provider’s network of contacts (e.g. XING or CRM systems) for contact paths to the prospective customer. The back end consists of Spring Boot-based microservices with Kubernetes as the container cluster manager, while the front end uses Angular (>2). To release new versions of the application at a high frequency, we set up a continuous delivery pipeline into the Google Cloud for both the test and the production environment.

Because we use Angular2, we chose the test automation tool Protractor. Protractor is built on Selenium WebDriver, more precisely on its JavaScript bindings (WebDriverJS). As is usual for interface tests, the tests run in the browser and simulate the behavior of a user working with the application. Since Protractor was written specifically for Angular, it can interact with Angular applications directly. Furthermore, explicit waiting constructs such as “sleeps” or “waits” are usually unnecessary: before each interaction, Protractor automatically waits until Angular has finished its pending tasks, so the components are in a state in which they are available for the intended interaction.
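To make the idea of automatic waiting concrete, here is a minimal, self-contained TypeScript model of it: before every action, the tool polls a stability check until the application has no pending work left. Protractor's real mechanism runs inside the browser and asks Angular itself whether it is stable; the helper names below (waitUntilStable, FakeApp, synchronizedAction) are hypothetical and only illustrate the principle.

```typescript
// Conceptual model of automatic synchronization (not Protractor's code):
// before each action, poll a stability check until it passes or times out.
// waitUntilStable, FakeApp and synchronizedAction are hypothetical names.

function delay(ms: number): Promise<void> {
  return new Promise<void>((resolve) => setTimeout(resolve, ms));
}

// Polls isStable() every pollIntervalMs until it returns true;
// rejects if that takes longer than timeoutMs.
async function waitUntilStable(
  isStable: () => boolean,
  timeoutMs = 1000,
  pollIntervalMs = 10
): Promise<void> {
  const deadline = Date.now() + timeoutMs;
  while (!isStable()) {
    if (Date.now() > deadline) {
      throw new Error(`Not stable after ${timeoutMs} ms`);
    }
    await delay(pollIntervalMs);
  }
}

// Stand-in for an app whose pending work (e.g. HTTP requests) ends after busyForMs.
class FakeApp {
  private readonly readyAt: number;
  constructor(busyForMs: number) {
    this.readyAt = Date.now() + busyForMs;
  }
  isStable(): boolean {
    return Date.now() >= this.readyAt;
  }
}

// The tool, not the test author, performs the wait before every action.
async function synchronizedAction<T>(app: FakeApp, action: () => T): Promise<T> {
  await waitUntilStable(() => app.isStable());
  return action();
}
```

Because the waiting is built into every action, the test code itself contains no sleeps; it only describes what the user does.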

How to

To run the tests, you need Angular CLI and Node.js. The interface tests (end-to-end or e2e tests) can then be created in the project. To prepare a local test run, switch to the project directory in a console and enter “ng serve”. Entering “ng e2e” then runs the test cases against localhost.

The end-to-end tests consist of TypeScript files with the extension .e2e-spec.ts, .po.ts (page objects) or just .ts. The test cases are described in the .e2e-spec.ts files, and only tests contained in these files are executed (with the default configuration generated by Angular CLI). The following example shows the header of a .e2e-spec.ts file:

```typescript
import { browser, by, ElementFinder } from 'protractor';
import { ResultPage } from './result-list.po';
import { CommonTabActions } from './common-tab-actions';
import { SearchPage } from './search.po';
import { AppPage } from './app.po';
import { CardPageObject } from './card.po';
import * as webdriver from 'selenium-webdriver';
import ModulePromise = webdriver.promise;
import Promise = webdriver.promise.Promise;

describe('Result list', function () {
  // Page objects used by the tests in this spec
  let app: AppPage;
  let result: ResultPage;
  let common: CommonTabActions;
  let search: SearchPage;

  // Runs before every test: fresh page objects and a fresh result page
  beforeEach(() => {
    app = new AppPage();
    result = new ResultPage();
    common = new CommonTabActions();
    search = new SearchPage();
    result.navigateTo();
  });

  // ... test cases (it blocks) follow here ...
});
```

Like the other file types, it starts with the imports. The test suite is then opened with describe; the string in parentheses names the area under test. Below that, variables for the page objects (defined in the .po.ts files) needed by the subsequent tests are declared and then instantiated. The beforeEach function defines preconditions that apply before each test. For reusability, tests can also be moved into separate modules (see the following code example):

```typescript
it('should display the correct background-image when accessing the page', require('./background'));
it('should send me to the impressum page', require('./impressum'));
it('should send me to the privacy-policy page', require('./privacy-policy'));
it('should open the search page after clicking clintr logo', require('./logo'));
```

The following code shows typical e2e tests. Each test calls page-object methods and states the expected result with expect(...). Keep in mind that the tests in the .e2e-spec.ts only call the methods of the .po.ts and then wait for the result; Protractor's version of expect resolves the returned promise before comparing it with the expected value. The methods that perform the actual actions belong in the .po.ts.

```typescript
it('should still show the elements of the searchbar', () => {
  expect(result.isSearchFieldDisplayed()).toBe(true);
  expect(result.isSearchButtonDisplayed()).toBe(true);
});

it('should show the correct Search Term', () => {
  expect(result.getSearchTerm()).toBe(result.searchTerm);
});
```
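Why can a spec pass a promise straight into expect? Protractor's adapted expect resolves the promise first and only then compares the value. A simplified sketch of that behavior follows; expectResolved and isSearchFieldDisplayedFake are hypothetical names, not part of Protractor or Jasmine.

```typescript
// Simplified illustration (not Protractor's implementation): the expectation
// accepts a plain value or a promise and compares only the resolved value.
type MaybePromise<T> = T | PromiseLike<T>;

function expectResolved<T>(actual: MaybePromise<T>) {
  return {
    async toBe(expected: T): Promise<void> {
      // await unwraps a promise and passes a plain value through unchanged
      const value: unknown = await actual;
      if (value !== expected) {
        throw new Error(`Expected ${String(value)} to be ${String(expected)}`);
      }
    },
  };
}

// Hypothetical page-object method that, like isDisplayed(), returns a promise.
function isSearchFieldDisplayedFake(): Promise<boolean> {
  return Promise.resolve(true);
}
```

In the real spec, Protractor's expect plays this role, which is why the tests above need no explicit then.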

The following code example shows the .po.ts belonging to the .e2e-spec.ts above. Not every .e2e-spec.ts has its own .po.ts, nor vice versa. A .po.ts can, for example, contain tab-related actions such as switching or closing tabs. As long as a .e2e-spec.ts only uses methods from other .po.ts files, it does not need its own .po.ts. As in the spec file, the .po.ts starts with the imports, followed by the class (ResultPage in this example).

When called, the navigateTo method takes the test to the page under test. In this case, the test is not supposed to open the result page directly. Instead, it first navigates to the search page, enters a search term and starts the search. This takes the test to the result_list page, where the subsequent tests run.

```typescript
import { element, by, ElementFinder, browser } from 'protractor';
import { SearchPage } from './search.po';
import * as webdriver from 'selenium-webdriver';
import { CardPageObject } from './card.po';
import ModulePromise = webdriver.promise;
import Promise = webdriver.promise.Promise;

export class ResultPage {
  public searchTerm: string = 'test';
  search: SearchPage;

  // Reaches the result list via the search page: open it,
  // enter the search term, then start the search.
  navigateTo(): Promise<void> {
    this.search = new SearchPage();
    return this.search.navigateTo()
      .then(() => this.search.setTextInSearchField(this.searchTerm))
      .then(() => this.search.clickSearchButton());
  }

  // ... the class continues with the query methods ...
```

Each of the following three methods queries an element of the page. The first two methods have a union type as their return value: they return either a boolean or a Promise<boolean>, i.e. either a Boolean or the promise of a Boolean. When a method returns a Promise, its result should always be processed with then; otherwise, asynchronous errors may occur.

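```typescript
  // (continuation of class ResultPage)

  // Each locator must match the element named in the method.
  isSearchButtonDisplayed(): Promise<boolean> | boolean {
    return element(by.name('searchButton')).isDisplayed();
  }

  isSearchFieldDisplayed(): Promise<boolean> | boolean {
    return element(by.name('searchInputField')).isDisplayed();
  }

  // Reads the current text of the search input field
  getSearchTerm(): Promise<string> {
    return element(by.name('searchInputField')).getAttribute('value');
  }
}
```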
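The split between spec and page object can also be tried out without a browser. The sketch below replaces Protractor with a hypothetical in-memory FakeBrowser; the element names and the navigation path via the search page follow the ResultPage example, while FakeBrowser, SearchPageSketch, ResultPageSketch and the URL paths are invented for illustration.

```typescript
// Page-object sketch against a hypothetical in-memory browser (no Protractor).
class FakeBrowser {
  url = '';
  private fields = new Map<string, string>();
  private visible = new Set<string>();

  async get(path: string): Promise<void> {
    this.url = path;
    if (path === '/search') {
      this.visible = new Set(['searchInputField', 'searchButton']);
    }
  }
  async type(elementName: string, text: string): Promise<void> {
    this.fields.set(elementName, text);
  }
  async click(elementName: string): Promise<void> {
    // Starting the search leads to the result list; the search bar stays visible.
    if (elementName === 'searchButton') this.url = '/result-list';
  }
  async isDisplayed(elementName: string): Promise<boolean> {
    return this.visible.has(elementName);
  }
  async getValue(elementName: string): Promise<string> {
    return this.fields.get(elementName) ?? '';
  }
}

class SearchPageSketch {
  constructor(private browserRef: FakeBrowser) {}
  navigateTo(): Promise<void> { return this.browserRef.get('/search'); }
  setTextInSearchField(text: string): Promise<void> { return this.browserRef.type('searchInputField', text); }
  clickSearchButton(): Promise<void> { return this.browserRef.click('searchButton'); }
}

class ResultPageSketch {
  searchTerm = 'test';
  constructor(private browserRef: FakeBrowser) {}

  // Same route as ResultPage.navigateTo: search page, term, search button.
  navigateTo(): Promise<void> {
    const search = new SearchPageSketch(this.browserRef);
    return search.navigateTo()
      .then(() => search.setTextInSearchField(this.searchTerm))
      .then(() => search.clickSearchButton());
  }
  isSearchFieldDisplayed(): Promise<boolean> { return this.browserRef.isDisplayed('searchInputField'); }
  isSearchButtonDisplayed(): Promise<boolean> { return this.browserRef.isDisplayed('searchButton'); }
  getSearchTerm(): Promise<string> { return this.browserRef.getValue('searchInputField'); }
}
```

The spec side would only call navigateTo and the query methods; all knowledge about element names stays inside the page objects.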

Example

One test case implemented in Clintr checks the link to the legal notice. The test clicks the link, switches to the newly opened tab, confirms that the URL contains /legal-notice and finally closes the tab. This test was initially created only for the home page.

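```typescript
it('should send me to the impressum page', () => {
  // impressum: a page object providing clickImpressumLink(), declared in the spec like common
  impressum.clickImpressumLink();
  // Switch to the tab opened by the link and check its URL
  common.switchToAnotherTab(1);
  expect(browser.getCurrentUrl()).toContain('/legal-notice');
  common.closeSelectedTab(1);
});
```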

Since the acceptance criteria require the legal notice to be accessible from every subpage, the test was later added to all the other specs. To keep the code clear, we moved this test into a separate module (impressum.ts).

```typescript
import { browser } from 'protractor';
import { AppPage } from './app.po';
import { CommonTabActions } from './common-tab-actions';

// Exports the test body so that every spec can pass it to it()
module.exports = () => {
  const common: CommonTabActions = new CommonTabActions();
  // Returning the promise lets the test runner wait for the whole check
  return new AppPage().clickImpressumLink().then(() => {
    common.switchToAnotherTab(1);
    expect(browser.getCurrentUrl()).toContain('/legal-notice');
    common.closeSelectedTab(1);
  });
};
```

It is then used in the .e2e-spec.ts files like this:

```typescript
it('should send me to the impressum page', require('./impressum'));
```
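The reuse pattern boils down to defining a test body once and handing the same function to it() in several specs. A minimal sketch with a hypothetical registerTest function in place of Jasmine's it() shows the idea; the suite names and the recorded URL are invented.

```typescript
// Minimal sketch: one test body, defined once, registered in several suites.
// registerTest stands in for Jasmine's it(); suite names are hypothetical.
type TestBody = () => Promise<void> | void;

interface RegisteredTest {
  suite: string;
  title: string;
  body: TestBody;
}

const registry: RegisteredTest[] = [];

function registerTest(suite: string, title: string, body: TestBody): void {
  registry.push({ suite, title, body });
}

// Stand-in for impressum.ts: the shared body records the URL it would check.
const visitedUrls: string[] = [];
const impressumTest: TestBody = async () => {
  visitedUrls.push('/legal-notice');
};

// Every spec reuses the same function instead of duplicating the test code.
for (const suiteName of ['Home', 'Search', 'Result list']) {
  registerTest(suiteName, 'should send me to the impressum page', impressumTest);
}

// Runs all registered tests in order and returns how many ran.
async function runAll(): Promise<number> {
  for (const test of registry) {
    await test.body();
  }
  return registry.length;
}
```

A change to the legal-notice check then only has to be made in one place.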

Particularities, notes & problems

Each .e2e-spec.ts can contain certain predefined functions, e.g. beforeEach, beforeAll, afterEach and afterAll. As the names suggest, the code in these functions runs before or after each test, or before or after all tests. In our example, each test should start with its own page view, so the navigateTo call belongs in the beforeEach function. afterEach can, for example, be used to close tabs that were opened during a test.
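The execution order of these hooks can be illustrated with a tiny runner. runSuite and the Hooks interface are hypothetical stand-ins (Jasmine provides the real functions globally), and error handling is left out.

```typescript
// Tiny runner showing the order of Jasmine-style hooks.
// runSuite and Hooks are hypothetical; error handling is omitted.
interface Hooks {
  beforeAll?: () => void;
  beforeEach?: () => void;
  afterEach?: () => void;
  afterAll?: () => void;
}

function runSuite(hooks: Hooks, tests: Array<() => void>): void {
  hooks.beforeAll?.();      // once, before the first test
  for (const test of tests) {
    hooks.beforeEach?.();   // e.g. result.navigateTo()
    test();
    hooks.afterEach?.();    // e.g. close tabs opened by the test
  }
  hooks.afterAll?.();       // once, after the last test
}

// Records the order in which everything runs.
const callOrder: string[] = [];
runSuite(
  {
    beforeAll: () => callOrder.push('beforeAll'),
    beforeEach: () => callOrder.push('beforeEach'),
    afterEach: () => callOrder.push('afterEach'),
    afterAll: () => callOrder.push('afterAll'),
  },
  [() => callOrder.push('test 1'), () => callOrder.push('test 2')]
);
```

After the run, callOrder contains beforeAll, beforeEach, test 1, afterEach, beforeEach, test 2, afterEach, afterAll.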

Each test starts with the word it. Prefixing it with an x, i.e. xit, skips the test in the test run. Unlike with a commented-out test, however, the test run reports that one or more tests were skipped. Prefixing a test with f, i.e. fit, focuses it: only tests starting with fit are executed. This is useful when you have a large number of test cases and only want to run some of them.
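The selection rules for it, xit and fit can be summarized in a few lines. The following is a simplified model under the assumption that all specs sit in one suite; planRun and the Spec type are hypothetical and not part of Jasmine.

```typescript
// Simplified model of spec selection: xit is always skipped, and as soon as
// any spec uses fit, only the fit specs run. Names are hypothetical.
type SpecKind = 'it' | 'xit' | 'fit';

interface Spec {
  kind: SpecKind;
  title: string;
}

interface Plan {
  run: string[];
  skipped: string[];
}

function planRun(specs: Spec[]): Plan {
  const hasFocus = specs.some((s) => s.kind === 'fit');
  const plan: Plan = { run: [], skipped: [] };
  for (const s of specs) {
    const shouldRun = hasFocus ? s.kind === 'fit' : s.kind === 'it';
    (shouldRun ? plan.run : plan.skipped).push(s.title);
  }
  return plan;
}
```

For example, a suite with one it, one fit and one xit runs only the fit spec.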

When working with the Promises that some methods return, keep in mind that incorrect handling can cause asynchronous errors. Many actions, such as pressing a button or querying whether an element is displayed, return a Promise; even opening a page returns a Promise<void>. To avoid errors, every Promise whose result triggers further actions, such as pressing a button or outputting a resulting value, should be followed explicitly by a then. For example:

```typescript
pressButton().then(() => {
  giveMeTheCreatedValue();
});

// If this value is again a Promise that should trigger something,
// the whole thing looks like this (returning the inner Promise keeps
// the chain connected, so errors propagate to the outer chain):
pressButton().then(() => {
  return giveMeTheCreatedValue().then(() => {
    doSomethingElse();
  });
});

// or slightly shorter
pressButton()
  .then(giveMeTheCreatedValue)
  .then(doSomethingElse);
```
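The difference between chained and unchained calls can be observed directly with native promises (outside Protractor's WebDriver control flow, which queues browser commands itself). The functions below are hypothetical versions of pressButton, giveMeTheCreatedValue and doSomethingElse that merely record when they finish; the delays are an assumption chosen so that the button press takes longer than creating the value.

```typescript
// Hypothetical stand-ins that record the order in which they complete.
const events: string[] = [];

function pause(ms: number): Promise<void> {
  return new Promise<void>((resolve) => setTimeout(resolve, ms));
}

async function pressButton(): Promise<void> {
  await pause(30); // the slower step
  events.push('button pressed');
}

async function giveMeTheCreatedValue(): Promise<string> {
  await pause(10);
  events.push('value created');
  return 'created';
}

function doSomethingElse(value: string): void {
  events.push(`used ${value}`);
}

// Chained: each step starts only after the previous Promise has resolved.
function chained(): Promise<void> {
  return pressButton()
    .then(giveMeTheCreatedValue)
    .then(doSomethingElse);
}

// Unchained: both calls start at once, so the value is created and used
// before the button press has completed.
function unchained(): void {
  pressButton();
  giveMeTheCreatedValue().then(doSomethingElse);
}
```

With chained(), the events appear in the intended order; with unchained(), "value created" and "used created" are recorded before "button pressed".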

Further information on Promises is available here.

Conclusion

Protractor is well suited for automating interface tests in a software development project that uses Angular2. The project’s documentation is detailed and comprehensive. Because Protractor builds on Selenium, the tests can easily be integrated into the build process.