Everybody knows what it’s like in a poorly run restaurant. Everyone at the table gets their food at a different time, the schnitzel has the consistency of a shoe sole, or we get something we didn’t order at all. The chef in the kitchen is completely overwhelmed and cannot cope with the flood of orders and the constant changes to the recipe.

Software testing is not all that different. Let’s think of a tester as a chef trying out a new recipe: the new recipe is our test object, and the chef checks it by cooking it. Now imagine the test team cannot keep up with the flood of changes. Tests are duplicated unnecessarily, forgotten, or overlooked. Defects go undetected and end up in production. The chaos is complete and the quality is poor. What should we do? Urge the chef on, automate the chaos, or simply hire more testers? No! Because what would happen then?

Urge the staff on to get things done?

Since the chef is already on the verge of collapse because of the chaos, urging everyone on will only bring a short-term improvement followed by a breakdown. It does not improve the situation in the long term.

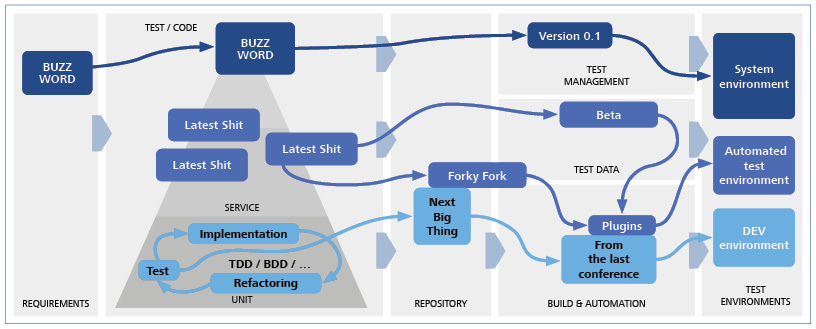

Automate the chaos (introduce test automation)?

Automation requires additional effort up front, and in this chaos it is not clear where to start. The result would only be even more chaos and overload in the kitchen, reducing the quality even further.

Simply hire more staff?

As the saying goes: “Too many cooks spoil the broth.” Simply giving the chef additional assistants does not necessarily solve all the problems. It is easy to underestimate that new staff must first be trained, which in turn can delay the workflow. This needs careful planning; otherwise it will only result in even more kitchen chaos.

So what’s the solution?

First of all, we have to analyze why there is chaos in the kitchen and what causes it. It often turns out that the bottlenecks are in unexpected places. For example, a waiter might write the orders on a piece of paper so illegibly that it is unclear what each table ordered. The chef (tester) then has to keep asking about the orders. In this analogy, the waiter is the analyst and each order the waiter writes down is an individual requirement. So in testing (in the kitchen), the problems may already lie in the recorded requirements, and the tester has to keep asking what a requirement is supposed to mean.

Likewise, the chef might only start looking for the ingredients and preparing the dish once an order has been placed. In testing terms, the tester only starts creating test cases once the finished product arrives.

Communication must also run smoothly, not only within the kitchen but also with the waiter, the restaurant owner, and the creator of the recipe. In testing, this means ongoing communication not only within the test team but also with the analyst, the product owner, and the developers.

Another problem could be that the stove and the sink are too far apart. For our testers, this means that their test tool is simply too slow and takes too long to create or execute a test case.

Consequently, the work process must be examined more closely:

- Starting situation

- Communication

- Work steps

- Tools used

- Documentation

- etc.

This analysis reveals shortcomings so that appropriate measures can be taken. In short, analysis followed by concrete measures must become a regular process. That’s right: I’m talking about retrospectives at regular intervals. It is important that such a retrospective not only identifies the issues but also defines measures, which are then implemented and reviewed in the next retrospective. If problems are only analyzed and no measures are taken, nothing will change.

The same applies to test automation: unless the work processes are optimized, it will not succeed. In other words, a schnitzel fried without oil turns black, whether the cooking is automated or not.

Unfortunately, there is no one-size-fits-all formula that works in every project. However, there are some “best practices” that can serve as suggestions and as an impetus for improving a project. To get started with a regular improvement process, you are welcome to contact us and hold your first retrospective with one of our experienced consultants.

Please have a look at my other articles on test automation:

Recipes for Test Automation (Part 1) – Soup

Recipes for Test Automation (Part 2) – Data Salad

Recipes for Test Automation (Part 3) – What should a proper (test) recipe look like?