What is a cyber-physical system?

A cyber-physical system (CPS) is used to control a physical-technical process and, for this purpose, combines electronics, complex software and network communication, e.g. via the Internet. One characteristic feature is that all elements make an inseparable contribution to the functioning of the system. For this reason, it would be wrong to consider any device with some software and a network connection to be a CPS.

Especially in manufacturing, CPSs are often mechatronic systems, e.g. interconnected robots. Embedded systems form the core of these systems; they are interconnected by networks and supplemented by central software systems, e.g. in the cloud.

Because they are interconnected, cyber-physical systems can also automatically control infrastructures that are far apart or spread across a large number of locations. Until now, such infrastructures could be automated only to a limited extent. Examples include decentrally controlled power grids, logistics processes and distributed production processes.

Thanks to their automation, digitalization and interconnection, CPSs provide a high degree of flexibility and autonomy in manufacturing. This enables matrix production systems, which support a wide range of variants in both large and small quantities [1].

So far, no standardized definition has been established. The term is used broadly and loosely, and sometimes serves to market utopian, futuristic concepts [2].

Where did this term originate?

In recent years, innovations in the fields of IT, network technology, electronics, etc. have made complex, automated and interconnected control systems possible. Academic disciplines such as control engineering and information technology offered no suitable concept for the new mix of technical processes, complex data and software. As a result, a new concept with a suitable name was needed.

The term is closely related to the Internet of Things (IoT). Moreover, cyber-physical systems make up the technical core of many innovations that bear the label “smart” in their name: Smart Home, Smart City, Smart Grid etc.

Features of CPS

As mentioned above, there is no generally recognized definition. However, the following characteristics can be distilled from the multitude of definitions:

- At its core there is a physical or technical process.

- There are sensors and models to digitally record the status of the process.

- There is complex software that makes (partially) automatic decisions based on that status. Human intervention is possible but not strictly required.

- There are technical means for implementing the selected decision.

- All elements of the system are interconnected in order to exchange information.
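The characteristics above can be read as a loop: sense, record the state, decide, act. The following is a minimal sketch of that loop, not a reference implementation. The temperature process, the names `ProcessState`, `decide` and `control_step`, and the tolerance value are all assumptions chosen for illustration; the sensor and actuator are simulated in place of networked hardware.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProcessState:
    """Digital record of the physical process (hypothetical: a temperature)."""
    temperature_c: float


def decide(state: ProcessState, setpoint_c: float, tolerance_c: float = 1.0) -> str:
    """Decision logic: choose an action from the recorded state."""
    if state.temperature_c < setpoint_c - tolerance_c:
        return "heat"
    if state.temperature_c > setpoint_c + tolerance_c:
        return "cool"
    return "hold"


def control_step(read_sensor: Callable[[], float],
                 actuate: Callable[[str], None],
                 setpoint_c: float) -> str:
    """One pass of the loop: sense -> record state -> decide -> act."""
    state = ProcessState(temperature_c=read_sensor())
    action = decide(state, setpoint_c)
    actuate(action)
    return action


# Simulated sensor readings and a list that collects the actuator commands.
applied_actions = []
readings = iter([18.0, 20.5, 23.0])
for _ in range(3):
    control_step(lambda: next(readings), applied_actions.append, setpoint_c=20.0)
```

No human input is needed inside the loop, but an operator could still override `decide` or the setpoint, which matches the "possible but not required" role of human intervention.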

One design model for CPSs is the layer model described in [2].

Figure 1: Layer model for the internal structure of cyber-physical systems

Examples of cyber-physical systems

- Self-controlled manufacturing machines and processes (Smart Factory)

- Decentralized control of power generation and consumption (Smart Grids)

- Household automation (Smart Home)

- Real-time traffic control, either centralized or decentralized, via traffic management systems or apps (an element of the Smart City)

Example of an industrial cyber-physical system

This example shows a manufacturing machine that can operate largely autonomously thanks to software and interconnection, thereby minimizing idle times, downtimes and maintenance times. Let us take a machine tool for cutting as an example.

Interconnected elements of the system:

- Machine tool with

  - QR code camera for workpiece identification

  - RFID reader for tool identification

  - Automatic inventory monitoring

  - Wear detection and maintenance prediction

- Central IT system for design data and tool parameters (CAM)

- MES/ERP system

The manufacturing machine in our example is capable of identifying the workpiece and the tool. Common technologies such as RFID or QR codes can be used for this purpose. A central IT system manages design and specification data, e.g. a computer-aided manufacturing (CAM) system for CNC machines. The manufacturing machine retrieves all the data required for processing from the central system using the IDs of the workpiece and the tool. As a result, there is no need to enter parameters manually, as the data is processed digitally throughout. The identification links the physical layer and the data layer of a cyber-physical system.
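The ID-based retrieval can be sketched as a simple keyed lookup. This is an illustration under assumptions, not how any particular CAM system works: the in-memory dictionary `CENTRAL_DATA` stands in for the central IT system, and the IDs, field names and parameter values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MachiningJob:
    """Everything the machine needs for one processing step."""
    workpiece_id: str
    tool_id: str
    program: str
    spindle_rpm: int
    feed_mm_per_min: int


# Hypothetical stand-in for the central system, keyed by (workpiece ID, tool ID).
CENTRAL_DATA = {
    ("WP-1001", "T-07"): {
        "program": "bracket_v3.nc",
        "spindle_rpm": 8000,
        "feed_mm_per_min": 1200,
    },
}


def fetch_job(workpiece_id: str, tool_id: str, store=CENTRAL_DATA) -> MachiningJob:
    """Resolve the scanned IDs to processing data; fail loudly if unknown."""
    try:
        params = store[(workpiece_id, tool_id)]
    except KeyError:
        raise LookupError(
            f"no data for workpiece {workpiece_id!r} with tool {tool_id!r}"
        ) from None
    return MachiningJob(workpiece_id, tool_id, **params)


# The IDs would come from the QR code camera and the RFID reader.
job = fetch_job("WP-1001", "T-07")
```

Raising an error for an unknown combination, rather than falling back to defaults, reflects the idea that no parameters are entered or guessed manually.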

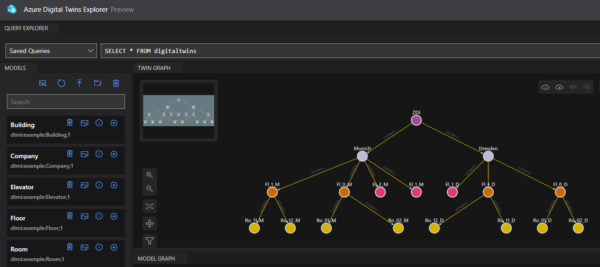

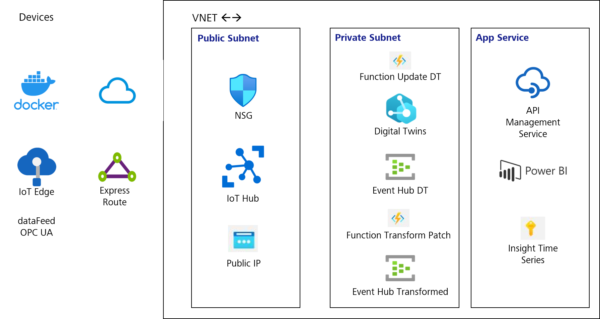

The digitized data for workpieces, machines and other manufacturing elements can be grouped under the term digital twin, which was presented in the blog article “Digital twins: a central pillar of Industry 4.0” by Marco Grafe.

The set-up tools and the material and resource inventories available in the machine are checked on the basis of the design and specification data. The machine notifies personnel if necessary. By performing this validation before processing begins, rejects can be avoided and utilization increased.
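A pre-processing check of this kind might look like the sketch below. The function name `validate_setup`, the data shapes and the example values are assumptions for illustration; a real machine would read the loaded tools and inventory levels from its own sensors.

```python
def validate_setup(required_tools, loaded_tools, required_material, inventory):
    """Compare what the job needs with what the machine has.

    required_tools / loaded_tools: sets of tool IDs.
    required_material / inventory: dicts mapping a resource name to a quantity.
    Returns a list of human-readable problems; an empty list means "ready".
    """
    problems = []
    for tool in sorted(required_tools - loaded_tools):
        problems.append(f"missing tool: {tool}")
    for material, needed in sorted(required_material.items()):
        available = inventory.get(material, 0.0)
        if available < needed:
            problems.append(
                f"insufficient {material}: need {needed}, have {available}"
            )
    return problems


# Hypothetical job: two tools required, one loaded; coolant is running low.
problems = validate_setup(
    required_tools={"T-07", "T-12"},
    loaded_tools={"T-07"},
    required_material={"coolant_l": 5.0},
    inventory={"coolant_l": 2.0},
)
```

If `problems` is non-empty, the machine would notify personnel instead of starting the job.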

The machine monitors its status (in operation, idle, failure) and reports the status digitally to a central system that records utilization and other operating indicators. These types of status monitoring functions are typically integrated into a Manufacturing Execution System (MES) and are now in widespread use. In our example, the machine is also able to measure its own wear and tear in order to predict and report maintenance requirements, thereby increasing its autonomy. These functions are known as predictive maintenance. All these measures improve machine availability and make maintenance and work planning easier.
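To make the idea of predictive maintenance concrete, here is a deliberately simple sketch. It assumes that wear grows roughly linearly with operating hours, which is a simplification that real systems rarely satisfy exactly. The function names, the sample format and the wear limit are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def hours_until_maintenance(samples: Sequence[Tuple[float, float]],
                            wear_limit_mm: float) -> Optional[float]:
    """Estimate the remaining operating hours before the wear limit is reached.

    samples: (operating_hours, measured_wear_mm) pairs in chronological order.
    Uses the average wear rate between the first and last sample.
    Returns None if no positive wear rate can be derived.
    """
    if len(samples) < 2:
        return None
    (t_first, w_first), (t_last, w_last) = samples[0], samples[-1]
    elapsed = t_last - t_first
    if elapsed <= 0:
        return None
    rate = (w_last - w_first) / elapsed
    if rate <= 0:
        return None
    return max(0.0, (wear_limit_mm - w_last) / rate)


def maintenance_due(samples, wear_limit_mm: float, planning_horizon_h: float) -> bool:
    """Report maintenance if the limit is expected within the planning horizon."""
    remaining = hours_until_maintenance(samples, wear_limit_mm)
    return remaining is not None and remaining <= planning_horizon_h


# Hypothetical measurements: 0.05 mm of wear per 100 operating hours.
wear_log = [(0.0, 0.00), (100.0, 0.05), (200.0, 0.10)]
remaining_h = hours_until_maintenance(wear_log, wear_limit_mm=0.30)
```

With these sample values the estimate comes out at roughly 400 operating hours, so the machine would report a maintenance requirement for any planning horizon longer than that.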

Through the use of electronics and software, our fictitious manufacturing machine is capable of working largely autonomously. The role of humans is reduced to feeding, set-up, troubleshooting and maintenance; humans only support the machine in the manufacturing process.

References

[1] Forschungsbeirat Industrie 4.0, "Expertise: Umsetzung von cyber-physischen Matrixproduktionssystemen," acatech – Deutsche Akademie der Technikwissenschaften, Munich, 2022.

[2] P. H. J. Nardelli, Cyber-physical systems: theory, methodology, and applications, Hoboken, New Jersey: Wiley, 2022.

[3] P. V. Krishna, V. Saritha and H. P. Sultana, Challenges, Opportunities, and Dimensions of Cyber-Physical Systems, Hershey, Pennsylvania: IGI Global, 2015.

[4] P. Marwedel, Eingebettete Systeme: Grundlagen Eingebetteter Systeme in Cyber-Physikalischen Systemen, Wiesbaden: Springer Vieweg, 2021.