Introduction

In the following three blog articles, I would like to introduce you to Appium, a test automation tool developed specifically for testing mobile applications. Appium lets us simulate mobile-specific scenarios such as gesture control, SMS or incoming calls, and automate the corresponding test cases. In addition to virtual devices, Appium can also run automated test cases on real mobile devices.

Why automate on mobile devices?

But why should we run our test automation on real devices? Why not use the virtual devices from the development tools Xcode (iOS) and Android Studio (Android)? These questions are justifiable, because the acquisition of end devices generates additional costs.

The first argument for automation on real end devices may sound trivial but is of great significance: your users do not use virtual devices.

One could assume that virtual devices mirror real end devices one-to-one. But this assumption is wrong. The main reason is that virtual devices don’t have any hardware of their own; they use the hardware of the computer on which they are installed. Experience also shows that errors found on a real end device cannot always be reproduced reliably on virtual devices.

In addition, automation on real end devices enables you to examine the performance of your application. Even if all the features of your application work perfectly, bad performance on the end device may result in your application being unusable. Tests on virtual devices provide us with no reliable data regarding this matter.

The problem of hardware and software fragmentation can also be seen as an argument for the automation on real end devices.

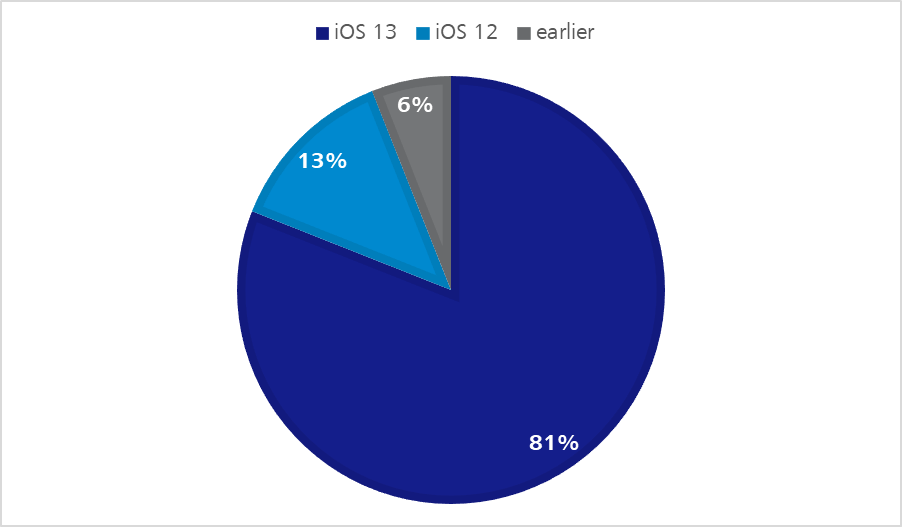

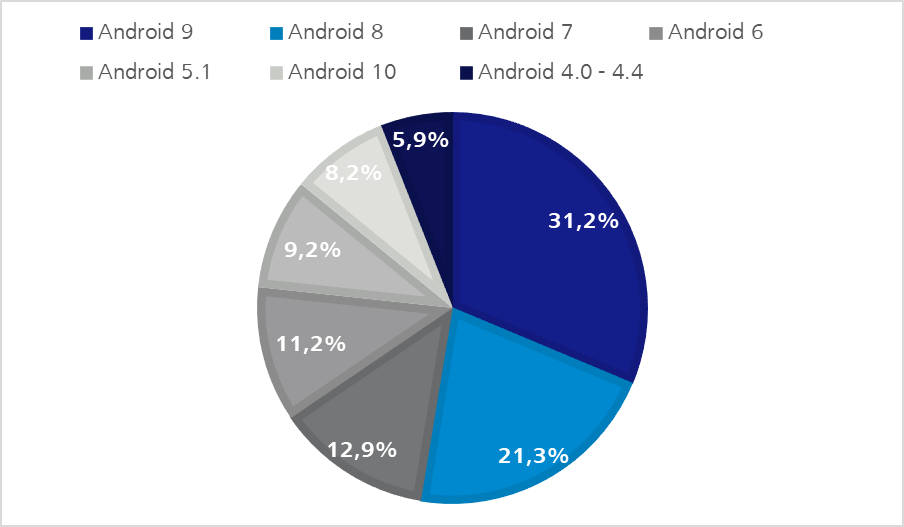

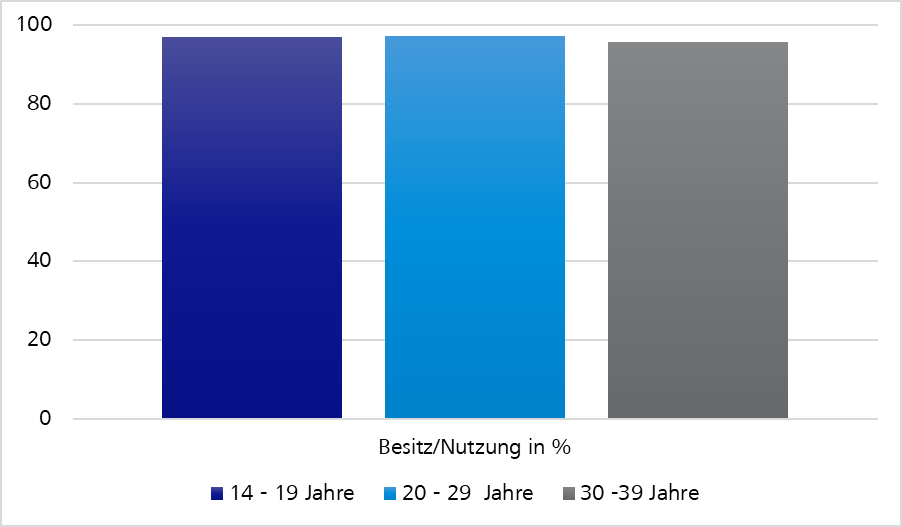

On both iOS and Android, an ever-increasing product range and operating system versions that remain in circulation longer and longer are producing a kind of natural fragmentation, as the accompanying statistics show.

On Android devices we can observe a further kind of software fragmentation: manufacturers are allowed to modify the Android operating system within certain limits. As a result, system apps such as the virtual keyboard can behave differently from device to device.

Let’s take Gboard from Google and the virtual keyboard of Samsung’s One UI as examples. Both support swipe input, that is, entering words by gliding over the keys, but they differ in how they do it.

Google’s virtual keyboard shows the word being formed while you glide over the keyboard, whereas Samsung’s keyboard shows the word only once your finger has stopped gliding.

One should not assume that the virtual devices from Xcode or Android Studio simulate those differences.

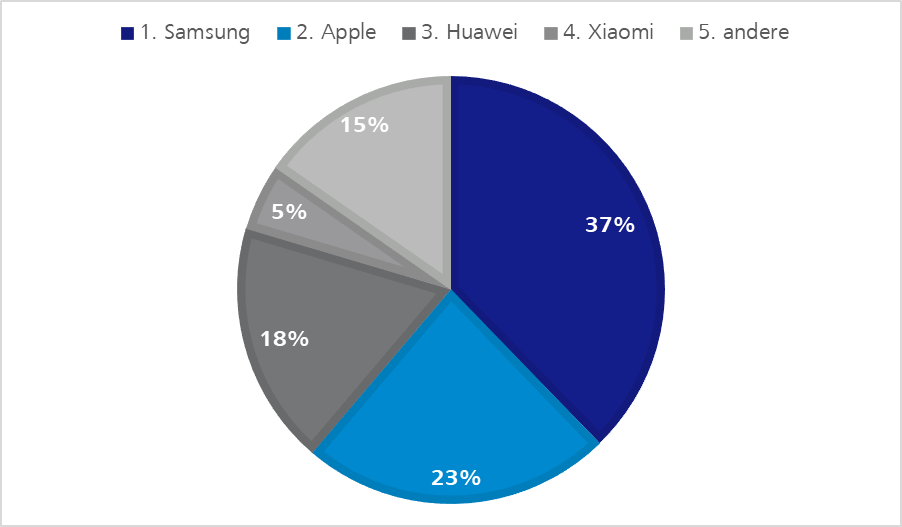

Of course, we cannot establish an infinitely large pool of mobile end devices. However, we can make a selection of devices which are strongly represented by their users.

End devices by Apple, Samsung and Huawei surely play a more decisive role in the pool than devices from other manufacturers, as the following statistics show.

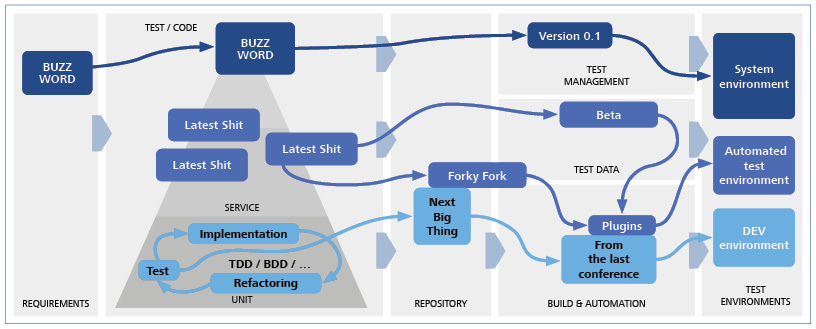

Issue – test automation tool fragmentation

Having covered the advantages of test automation on real end devices, a fundamental question remains for projects with existing test automation: why should Appium be introduced as an additional test automation tool?

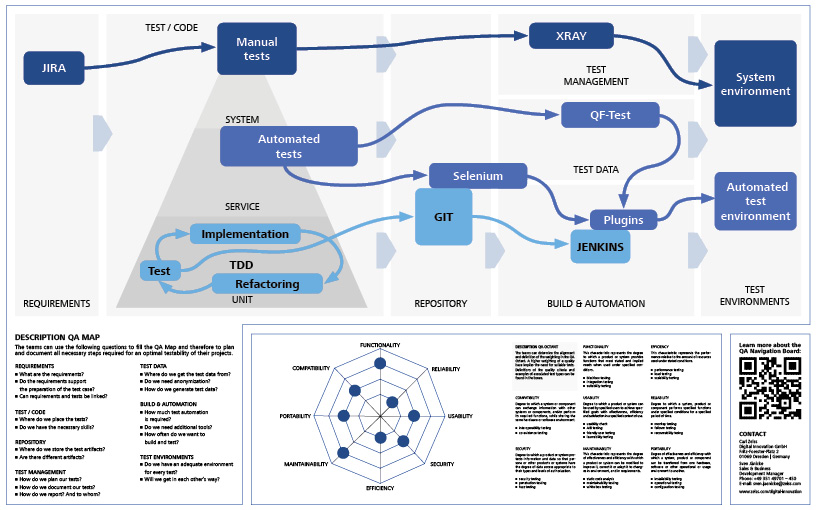

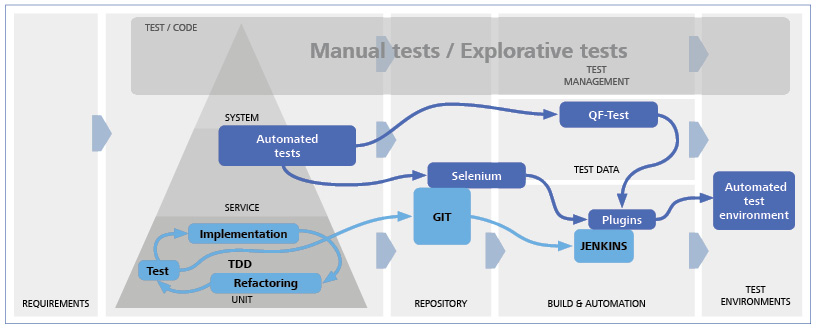

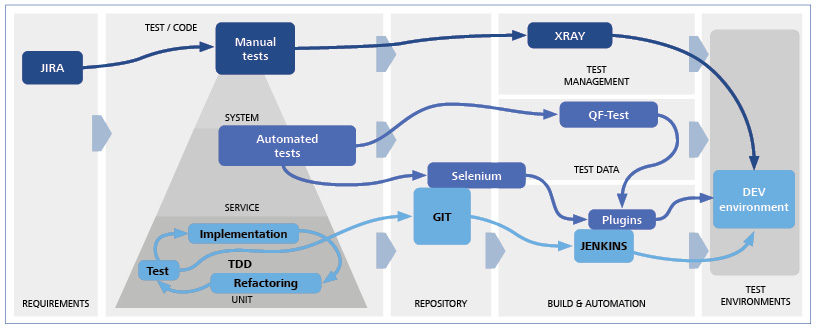

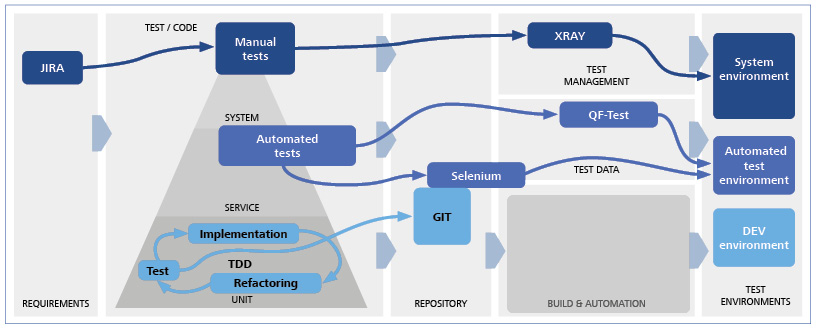

The problem of software fragmentation can also be observed in test case automation. There are more and more tools which support certain functions and settings but are only conditionally compatible with each other. Ideally, we would like to use a single test automation tool in order to reduce the obstacles to test case automation.

To answer the last question, let’s start from the perspective of a multi-platform project.

Our application has been built as a desktop website, a native iOS app and a hybrid Android app. Additionally, we have created a responsive web app, because our website already has good coverage of automated test cases via Selenium.

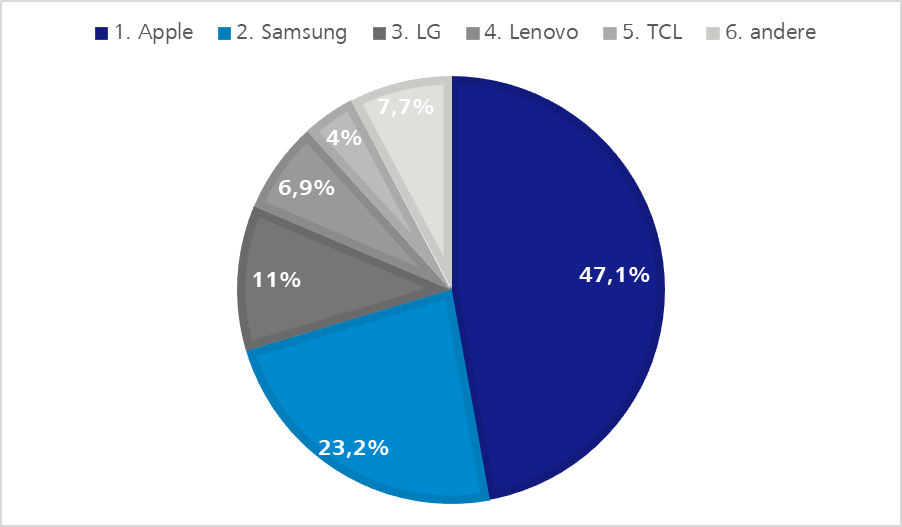

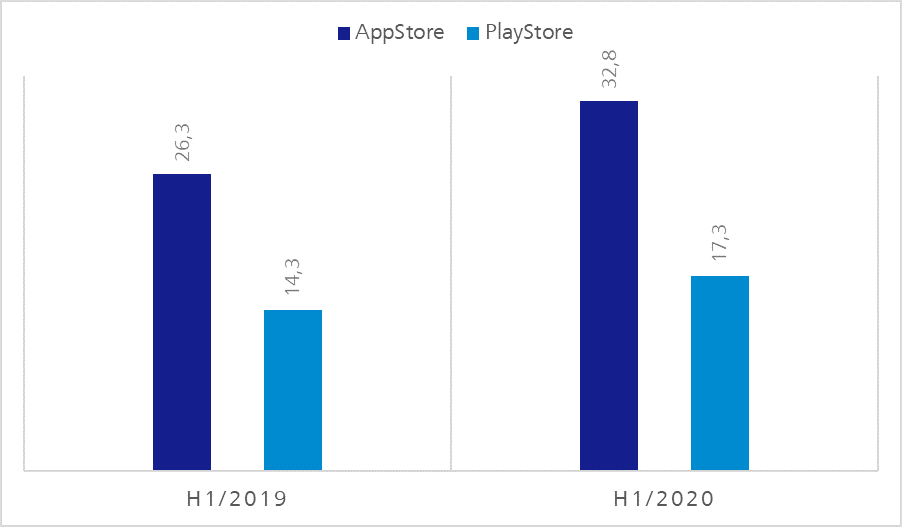

The following statistics indicate that test case automation limited to the website alone is no longer sufficient for our multi-platform project.

We should assume that all relevant target groups also use our application on mobile end devices.

Appium vs. Selenium

A short look back at the origins of test automation tools shows why introducing further tools is useful in our example.

Websites were among the first applications for which test cases were automated on end devices. As the number of browsers grew, automating test cases in the front end became necessary as well.

One of the most successful test automation tools in this area is Selenium. True to its origins, however, Selenium is geared towards the test case automation of websites. Mobile-specific use cases such as gesture control are not supported.

But let us assume that in our multi-platform project only a small number of users utilize the mobile applications. The majority use the desktop website and this has, as we know, a good automated test case coverage via Selenium.

Is the introduction of Appium still worthwhile?

Given the problem of tool fragmentation just described, the introduction of Appium may appear to bring more costs than benefits. One could suppose that our teams, which are experienced in Selenium automation, could automate the most important test cases for our mobile applications with Selenium and a few workarounds. But let’s take a closer look at Appium to check whether this claim is true.

Automation of mobile-specific application scenarios using Appium

Let us first take a look at the problem of mobile-specific application scenarios. Let’s consider use cases that Appium supports but that would surely cause difficulties for our test automation experts using Selenium.

Gesture control

Our application contains a list, and our users would like to reach its end. In the desktop browser version, the users will certainly use the mouse wheel, the scroll bar or the arrow keys on the keyboard for this. In the mobile applications, however, they will fall back on various gestures in order to reach the end of the list. They could put a finger on the lower screen area, hold it, pull it upwards and release it again to move the list by a certain distance.

Another possibility would be to place the finger at the bottom of the screen and trigger an automatic scroll-down with a quick upward swipe. For these cases we can fall back on the Touch API provided by Appium.
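As a rough illustration of the swipe gesture, the following sketch computes the start and end points of an upward swipe from the screen size. The class `SwipeSketch`, its method names and the 80%/20% ratios are assumptions made for this example and are not part of Appium; in a real test, the two points would be passed on to Appium's gesture commands.

```java
// Hypothetical helper, not part of Appium: it only computes the coordinates
// that an upward swipe gesture would need.
final class SwipeSketch {

    /** Start point {x, y}: horizontally centred, 80% of the way down the screen. */
    static int[] swipeUpStart(int screenWidth, int screenHeight) {
        return new int[] {screenWidth / 2, screenHeight * 80 / 100};
    }

    /** End point {x, y}: horizontally centred, 20% of the way down the screen. */
    static int[] swipeUpEnd(int screenWidth, int screenHeight) {
        return new int[] {screenWidth / 2, screenHeight * 20 / 100};
    }

    public static void main(String[] args) {
        // Example screen size in pixels (assumed values).
        int[] start = swipeUpStart(1080, 1920);
        int[] end = swipeUpEnd(1080, 1920);
        System.out.println("press at (" + start[0] + ", " + start[1] + ")");
        System.out.println("release at (" + end[0] + ", " + end[1] + ")");
    }
}
```

Deriving the points from the screen size rather than hard-coding them keeps the same gesture usable on devices with different resolutions.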

Calls and SMS

Incoming calls and SMS have a much heavier impact on the use of our application on mobile end devices. While on the desktop a call usually only opens another window, on mobile end devices the running application is usually interrupted and the phone application is brought to the foreground. Furthermore, incoming SMS usually trigger a notification on top of the current application. For these cases we can fall back on the phone-call API from Appium.

System applications

On mobile end devices, our application probably comes into contact with system applications much more often, be it the calendar, the photo gallery or the built-in map application. Here too, regardless of which Appium driver we use, Appium lets us integrate these system applications into our test automation.

Automation of hybrid apps

Now let’s take a look at the tool fragmentation issue in test case automation.

One part of the problem consists of the various types of development in mobile applications. In our example project the most common types are represented.

Let’s take a closer look at how Appium deals with the more complex hybrid applications via the Context API.

To find elements, or to interact with them, Appium assumes by default that all our commands refer to native UI-components which are displayed on the screen. Our test session is therefore still in the so-called Native Context.

If we use, for example, the Appium command getPageSource in a hybrid application, the output for web views contains only elements like <XCUIElementType…>. Important elements such as anchor tags or divs are not shown to us at this stage.

So as long as we stay in the Native Context, all web views, the so-called Web Contexts, are a black box for Appium. We may be able to see the web view UI element itself, and possibly even some buttons that iOS exposes, for example. However, it will not be possible to find elements by CSS selectors.

To get better access to the Web Context, we need to transfer our Appium session into the Web Context. We do this by first finding out the name of the Web Context with the command driver.getContextHandles. This returns an array of all context names that Appium has assigned to the available contexts. In our case the output is a Web Context called WebView1 and a Native Context called NativeElement1.

Now, to transfer our Appium session into the Web Context, we use the command driver.setContext(WebView1). Once this command has been executed, Appium works in the environment of the specified Context.

All further commands now operate within the Web Context and relate to WebView1. To address the native elements again, we use the same command once more with the name of the Native Context which we would like to address. So in our case: driver.setContext(NativeElement1). If we would like to find out which Context we are currently in, we can use the following command: String currentContext = driver.getContext();
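The context switch described here can be sketched without a device as follows. The interface `ContextDriver` and the in-memory `FakeContextDriver` are hypothetical stand-ins for a real driver; the method names follow the commands mentioned in the text, and an actual client library may name them differently or use other context names.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the context-related part of a driver.
// Method names follow the article; a real client library may differ.
interface ContextDriver {
    List<String> getContextHandles();
    void setContext(String name);
    String getContext();
}

// In-memory fake so the sketch runs without a device or an Appium server.
final class FakeContextDriver implements ContextDriver {
    private final List<String> handles;
    private String current;

    FakeContextDriver(List<String> handles, String initial) {
        this.handles = new ArrayList<>(handles);
        this.current = initial;
    }

    @Override public List<String> getContextHandles() { return new ArrayList<>(handles); }

    @Override public void setContext(String name) {
        if (!handles.contains(name)) {
            throw new IllegalArgumentException("Unknown context: " + name);
        }
        current = name;
    }

    @Override public String getContext() { return current; }
}

final class ContextSketch {
    /** Switches to the first context whose name starts with "WebView" and returns its name. */
    static String switchToFirstWebView(ContextDriver driver) {
        for (String handle : driver.getContextHandles()) {
            if (handle.startsWith("WebView")) {
                driver.setContext(handle);
                return handle;
            }
        }
        throw new IllegalStateException("No web context available");
    }

    public static void main(String[] args) {
        ContextDriver driver = new FakeContextDriver(
                List.of("NativeElement1", "WebView1"), "NativeElement1");
        switchToFirstWebView(driver);            // now in the Web Context
        System.out.println(driver.getContext());
        driver.setContext("NativeElement1");     // back to the Native Context
        System.out.println(driver.getContext());
    }
}
```

Looking the web context up by name prefix, instead of hard-coding it, is one way to cope with context names that vary between runs.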

After briefly discussing the Context API from Appium, let’s take a look at how it works.

On iOS, Appium uses the so-called "remote Debugger Protocol", which is supported by Safari. This protocol enables us to receive information about the pages displayed in Safari, or to control browsing behavior. One capability we can fall back on is injecting JavaScript into the current web page.

Appium uses this function to perform all commands available in the WebDriver API.

Support of programming languages

Appium allows you to write tests in different programming languages. This is an advantage of the client-server model. The Appium development team is able to implement all Appium functions in a single server code base, which is written in JavaScript (Appium Server = NodeJS platform). Nevertheless, users who write code in other programming languages can access these functions. Access takes place via the Appium client libraries, which Appium provides. If, for example, we would like to write our automated tests in Java, we need to integrate the corresponding Appium Java libraries into our Appium Client.

Appium client server model

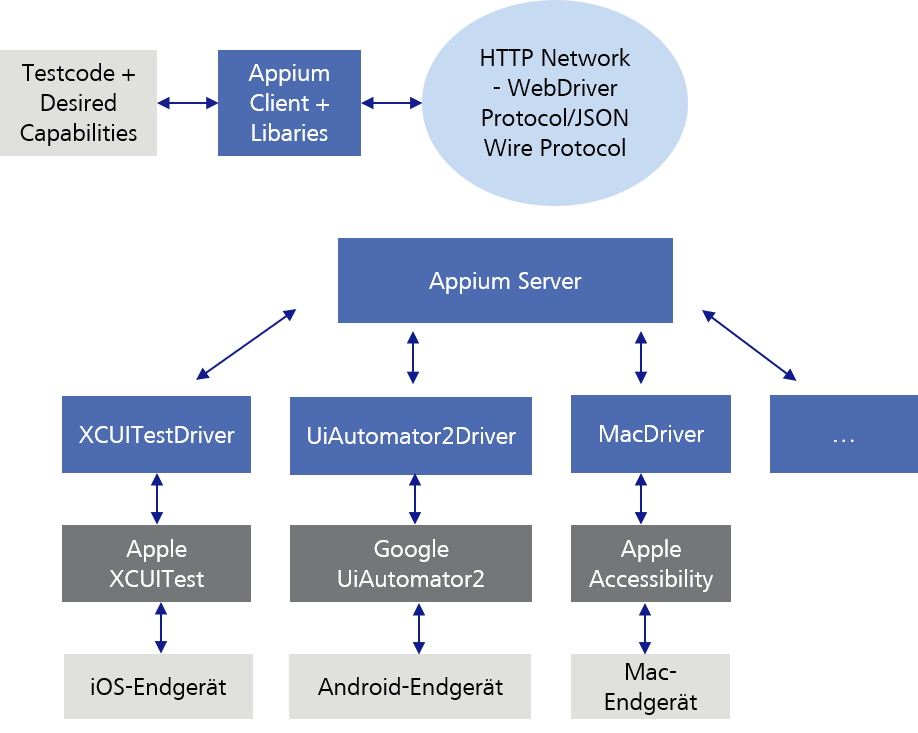

As already described, we send our test code (commands/requests) via the Appium Client, with the corresponding libraries, to the Appium Server. The Appium Client can be run from a development environment such as Eclipse, for example. The Appium Server in turn sends our test code (commands/requests) to the mobile end device, on which it is then executed. But let’s go into more detail.

So that the Appium Server can interpret the test code (commands/requests) from the Appium Client, the WebDriver Protocol, or the older JSON Wire Protocol, is used, which turns our test code into RESTful HTTP requests.
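To make this more concrete, here is a minimal sketch of what such a request could look like for the WebDriver "find element" command, which is a POST to `/session/{session id}/element` with a `using`/`value` body. The request is only built, not sent. The class name, the server address (Appium's default port 4723 on the local machine), the session id and the element name `login_button` are assumptions for illustration; client libraries normally build these requests for us.

```java
import java.net.URI;
import java.net.http.HttpRequest;

final class WebDriverRequestSketch {

    /** Builds (but does not send) a WebDriver "find element" request. */
    static HttpRequest findElementRequest(String serverUrl, String sessionId,
                                          String strategy, String selector) {
        // Body format of the WebDriver protocol: {"using": ..., "value": ...}.
        // No JSON escaping is done here, so the inputs must not contain quotes.
        String body = "{\"using\":\"" + strategy + "\",\"value\":\"" + selector + "\"}";
        return HttpRequest.newBuilder()
                .uri(URI.create(serverUrl + "/session/" + sessionId + "/element"))
                .header("Content-Type", "application/json; charset=utf-8")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    public static void main(String[] args) {
        // Server address and session id are placeholder values.
        HttpRequest request = findElementRequest(
                "http://127.0.0.1:4723", "hypothetical-session-id",
                "accessibility id", "login_button");
        System.out.println(request.method() + " " + request.uri());
    }
}
```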

Afterwards, depending on which end device we would like to address, the Appium Server sends our test code to the platform-specific test framework, which in turn executes the test code on the end device. For this purpose, the Appium Server is able to communicate with the different test frameworks.

So that the Appium Server can decide which of those platform-specific test frameworks, or which end device, it should communicate with, our test code has to be sent to the Appium Server together with the so-called "Desired Capabilities" as a JSON object. In the Desired Capabilities we specify, for example, the device name, the platform (iOS, Android…) and the platform version.

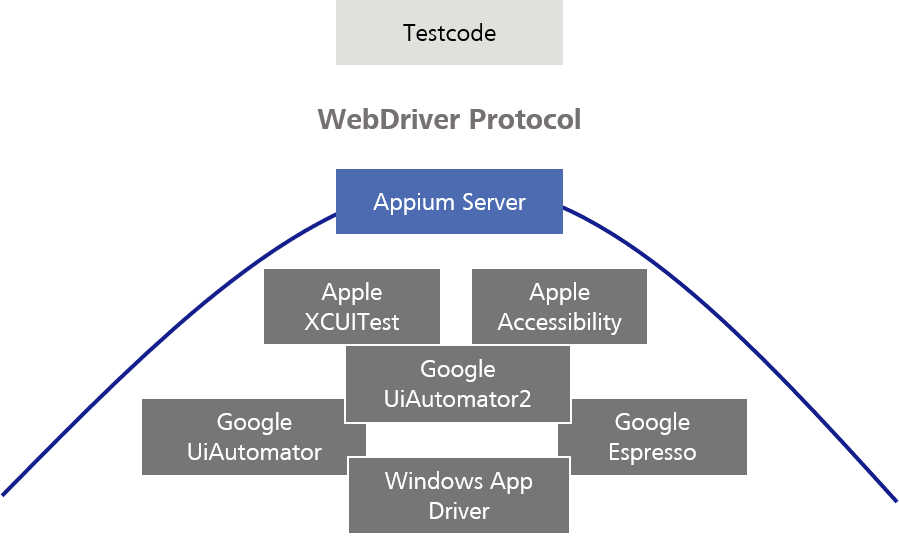
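A set of Desired Capabilities could be assembled roughly as in the following sketch. The class and the example device values are assumptions for illustration; `platformName`, `platformVersion`, `deviceName` and `automationName` are capability names used by Appium, although newer Appium versions may expect an `appium:` prefix on some of them.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

final class CapabilitiesSketch {

    /** Collects the capabilities named in the text, plus the test framework to use. */
    static Map<String, String> capabilities(String platform, String version,
                                            String device, String automation) {
        Map<String, String> caps = new LinkedHashMap<>();
        caps.put("platformName", platform);
        caps.put("platformVersion", version);
        caps.put("deviceName", device);
        caps.put("automationName", automation);
        return caps;
    }

    /** Minimal JSON rendering; assumes keys and values need no escaping. */
    static String toJson(Map<String, String> caps) {
        return caps.entrySet().stream()
                .map(e -> "\"" + e.getKey() + "\":\"" + e.getValue() + "\"")
                .collect(Collectors.joining(",", "{", "}"));
    }

    public static void main(String[] args) {
        // Device names and versions are example values only.
        System.out.println(toJson(capabilities("Android", "11", "Pixel 4", "UiAutomator2")));
        System.out.println(toJson(capabilities("iOS", "14.4", "iPhone 12", "XCUITest")));
    }
}
```

The same helper produces the capabilities for both platforms; only the values differ, which is what allows one test script to be pointed at different devices and test frameworks.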

There is not necessarily only one test framework per platform. For example, on Android there are three different automation technologies by Google. The oldest one, UiAutomator, was replaced by UiAutomator2, which added a variety of new automation functions.

The latest test framework is called Espresso, and it works with a totally different model than UiAutomator2. However, it offers much greater stability and test speed.

You can instruct your Appium tests to refer to one of those test frameworks, based on their specific functions and the platform support.

Theoretically, you could also use the test frameworks directly. However, Appium offers a practical wrapper around the different test frameworks: it exposes them all through the same WebDriver Protocol and tries to smooth out behavioral differences between them.

When new test frameworks appear, the Appium team can create a communication protocol (Driver) for them so you can access these without having to rewrite all your test scripts. This is the strength of using a standard protocol and the Client-Server-Architecture.

It also enables cross-platform automation. Instead of learning two different test frameworks in two different languages, in most cases you could write one Appium-Script and run it on different platforms.

Whoever uses Appium does not need to know much about these underlying test frameworks, because they only deal with the Appium API and for example, do not need to write an XCUI test or an Espresso test.

Summary

In summary, we can say: Appium is a tool for the automation of mobile applications which was inspired by Selenium. In fact, Appium tests are based on the same protocol as Selenium tests. Selenium offers its users the possibility to control web browsers. For historical reasons, it is sometimes called "WebDriver" or "Selenium/WebDriver".

As you may have already recognized from the name, Appium was designed to be as compatible as possible with Selenium. Appium adopted the same protocol as Selenium, so that Appium and Selenium tests mostly look the same and "feel" the same. In fact, the Appium Client Libraries were built on top of the Selenium Client Libraries.

But there was a problem: The Selenium Protocol was only developed for the automation of web browsers. Therefore, Appium had to add commands to the protocol to enable mobile-specific automation. This means that Appium commands are an extension of Selenium ones.

The earlier claim that introducing Appium in our example project would not be worthwhile from a cost-benefit perspective is therefore wrong. It can even be assumed that, besides improving test automation coverage, the introduction could also contribute to an improvement of the process.

I hope you have enjoyed this short excursion into the world of test automation and the technical background of Appium. In my second blog post on Appium, I will show you how to set it up. In addition, I will demonstrate, using specific code examples, what we are able to achieve using Appium in our multi-platform project. There we will discuss the cases already addressed here. I would be happy to welcome you to my next post in this blog series.

Until then, happy testing.