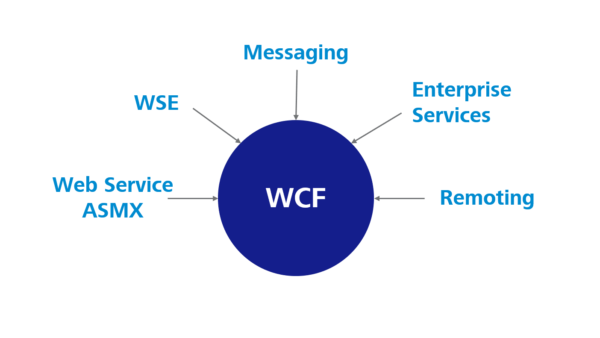

This post in the series on alternatives to Windows Communication Foundation (WCF) describes the particularities and challenges of migrating away from WCF in preparation for subsequently porting the application to .NET Core.

The previous post described ASP.NET Core Web API as an alternative, and this article will address another option: gRPC. Again, we will describe a possible step-by-step procedure for the migration from WCF to gRPC.

Migration procedure

The solution usually contains a separate WCF project. As a direct conversion is not possible, this project can remain unchanged in the solution for the time being.

You should first create a new class library project for shared objects between the server and the client. Then copy the ServiceContract interfaces and the DataContract classes from the WCF project to this project, and remove the WCF-specific attributes such as “ServiceContract”, “OperationContract”, “DataContract”, “DataMember”, etc.
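As a rough sketch, the shared contracts could look as follows once the attributes have been removed. The names are taken from the example contract shown later in this post; the file layout and the absence of a namespace are assumptions made for brevity.

```csharp
using System;
using System.Collections.Generic;

// Shared contract after removing [ServiceContract] and [OperationContract]
public interface IDataInputService
{
    int CreateUser(User user);
    int Login(User user);
    List<Time> GetTimes(int userId);
    void AddTime(Time time, int userId);
    List<string> Projects();
}

// Former [DataContract] classes, now plain C# classes without [DataMember]
public class User
{
    public string Name { get; set; }
    public string Passwort { get; set; }
}

public class Time
{
    public DateTime Start { get; set; }
    public DateTime End { get; set; }
    public string Project { get; set; }
    public int uId { get; set; }
    public int Id { get; set; }
}
```

Because these types no longer depend on System.ServiceModel, the class library can be referenced by both the client and the later gRPC server.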

Client project

First of all, remove the WCF Service reference in the project that consumes the WCF Service. The WCF-specific attributes such as “CallbackBehavior” and the like can be removed as well.

Add a new reference to the previously created class library project for the shared objects. Next, create an empty implementation of the ServiceContract interface, which is now located in the class library project, in the client project. Then replace the “old” initialization of the WCF Service with an instance of this, as yet empty, implementation.

Lastly, you have to change the using directives for the previously used DataContract classes from the WCF Service to the new class library project. It should now be possible to compile the client project again. In order to be able to start the project again, you have to remove the <system.serviceModel> element from the *.config file.

Creating the interface description with Protocol Buffers

With gRPC, the interface is described in *.proto files using the Protocol Buffers language. The *.proto file should preferably be added to the newly created class library project. In order to be able to generate server and client classes from it later, you also have to add the “Google.Protobuf”, “Grpc.Core” and “Grpc.Tools” NuGet packages.

Once the *.proto file has been created, it has to be registered in an “ItemGroup” node of the *.csproj file by means of the following “Protobuf” element. The GrpcServices="Both" setting causes both the server and the client classes to be generated.

    <Project Sdk="Microsoft.NET.Sdk">
      <ItemGroup>
        <Protobuf Include="ProtoTimeService.proto" GrpcServices="Both" />
      </ItemGroup>
    </Project>

Registration of the *.proto file in the *.csproj file

Structure of the *.proto file

Below is an example of how to transfer a WCF Service description into a *.proto file.

Interfaces labeled [ServiceContract] become “service” definitions, and methods labeled [OperationContract] become “rpc” calls. The classes labeled [DataContract] become “message” objects.

    [ServiceContract]
    public interface IDataInputService
    {
        [OperationContract]
        int CreateUser(User user);

        [OperationContract]
        int Login(User user);

        [OperationContract]
        List<Time> GetTimes(int userId);

        [OperationContract]
        void AddTime(Time time, int userId);

        [OperationContract]
        List<string> Projects();
    }

    [DataContract]
    public class User
    {
        [DataMember]
        public string Name { get; set; }

        [DataMember]
        public string Passwort { get; set; }
    }

    [DataContract]
    public class Time
    {
        [DataMember]
        public DateTime Start { get; set; }

        [DataMember]
        public DateTime End { get; set; }

        [DataMember]
        public string Project { get; set; }

        [DataMember]
        public int uId { get; set; }

        [DataMember]
        public int Id { get; set; }
    }

Example of a WCF ServiceContract and DataContract to be migrated

    syntax = "proto3";

    import "google/protobuf/timestamp.proto";
    import "google/protobuf/empty.proto";

    option csharp_namespace = "DataInputt.TimeService.Api";

    service TimeService {
        rpc CreateUser (UserDto) returns (UserResponse) {}
        rpc Login (UserDto) returns (UserResponse) {}
        rpc GetTimes (GetTimesRequest) returns (TimeCollection) {}
        rpc AddTime (AddTimeRequest) returns (google.protobuf.Empty) {}
        rpc Projects (google.protobuf.Empty) returns (ProjectCollection) {}
    }

    message UserDto {
        string name = 1;
        string passwort = 2;
    }

    message TimeDto {
        google.protobuf.Timestamp start = 1;
        google.protobuf.Timestamp end = 2;
        string project = 3;
        int32 uid = 4;
        int32 id = 5;
    }

    message UserResponse {
        int32 id = 1;
    }

    message GetTimesRequest {
        int32 userId = 1;
    }

    message TimeCollection {
        repeated TimeDto times = 1;
    }

    message AddTimeRequest {
        TimeDto time = 1;
        int32 userId = 2;
    }

    message ProjectCollection {
        repeated string projects = 1;
    }

Example of the created gRPC *.proto file

When you create the *.proto file, you should observe the following points.

Indicate the namespace

To ensure that the generated server and client implementation is given the correct namespace, it should be indicated in the *.proto file.

Definition of input parameters / return values

gRPC interfaces only allow for calls with a single parameter. If you work with several input parameters in the WCF Service, they have to be combined into a new message object.

Every call of a gRPC interface needs a return value as well. If there were void methods in the WCF Service, they now have to return the special “google.protobuf.Empty” type in gRPC.

Furthermore, using a single primitive data type (int, bool, string) for the input and return is not allowed. If only an int or string is to be used for the return value, another message object has to be created for this purpose.

If the methods of the WCF Service call one another, this was very simple as long as primitive data types were used. If you want this to remain possible in the gRPC interface, you have to ensure that the respective methods use the same message objects. This way, you can avoid unnecessary mapping.

Names of the message objects

When you name the message objects, you should not use the exact same names as the DataContract classes of the WCF Service. This is important because some of the C# classes that will later be generated from the definition use different data types and need to be mapped before they can be used. In order to distinguish them more clearly from the DataContract classes, it is advisable to use distinctive names.

Furthermore, each property within a message object has to be assigned a field number that is unique within that message. The numbers are usually assigned sequentially, starting with 1.

Data types in message objects

C# classes are automatically generated from the message objects of the *.proto file. You should be aware that the standard C# data types are not always used for the generation.

For example, the google.protobuf.Timestamp type specified in the *.proto file becomes the Google.Protobuf.WellKnownTypes.Timestamp type in the C# class and has to be converted into a DateTime first whenever it is to be used.
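A minimal sketch of such a conversion, assuming the Google.Protobuf package is referenced, might look like this. The helper class and method names are hypothetical. Timestamp.FromDateTime only accepts UTC values, which is why the value is converted first.

```csharp
using System;
using Google.Protobuf.WellKnownTypes;

// Hypothetical helper; not part of the generated code
public static class TimestampMapping
{
    // FromDateTime throws for non-UTC values, so convert to UTC first
    public static Timestamp ToTimestamp(DateTime value) =>
        Timestamp.FromDateTime(value.ToUniversalTime());

    // ToDateTime always returns a UTC DateTime; convert it back to
    // local time if the existing code expects local values
    public static DateTime ToLocalDateTime(Timestamp value) =>
        value.ToDateTime().ToLocalTime();
}
```

Whether local time or UTC is the right target depends on how the existing DataContract classes were used, so this choice is an assumption.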

If the *.proto file contains “repeated”, this does not become a List<T>, but a Google.Protobuf.Collections.RepeatedField<T>, which needs to be mapped accordingly as well.
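The mapping in both directions can be sketched as follows, assuming the Google.Protobuf package is referenced. The helper class is hypothetical. Note that the generated properties for “repeated” fields have no setter, so on a generated message the items are added to the existing collection, for example with AddRange.

```csharp
using System.Collections.Generic;
using System.Linq;
using Google.Protobuf.Collections;

// Hypothetical helper; not part of the generated code
public static class RepeatedFieldMapping
{
    // List<T> (or any sequence) to RepeatedField<T>
    public static RepeatedField<T> ToRepeatedField<T>(IEnumerable<T> items)
    {
        var field = new RepeatedField<T>();
        field.AddRange(items);
        return field;
    }

    // RepeatedField<T> implements IList<T>, so LINQ can be used directly
    public static List<T> ToPlainList<T>(RepeatedField<T> field) => field.ToList();
}
```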

Other types such as Dictionary<K, V> also differ between the *.proto file and the C# class generated later: a “map<K, V>” field in the *.proto file becomes a Google.Protobuf.Collections.MapField<K, V>. The C# type “decimal” currently has no equivalent in the *.proto file at all, and the floating-point types “float” and “double” are not a substitute because they introduce rounding errors. As a workaround, creating your own decimal message object that specifies the pre-decimal and the decimal places as separate int values is recommended.
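One possible shape of such a decimal workaround is sketched below. The message name DecimalDto, its fields and the choice of nine decimal places (billionths) are assumptions made for illustration; the plain class stands in for the class that would be generated from the message.

```csharp
using System;

// Stand-in for the class generated from a hypothetical message such as:
//   message DecimalDto { int64 units = 1; sfixed32 nanos = 2; }
public class DecimalDto
{
    public long Units { get; set; }  // digits before the decimal point
    public int Nanos { get; set; }   // digits after the decimal point, in billionths
}

public static class DecimalMapping
{
    private const decimal NanoFactor = 1000000000m;

    public static DecimalDto ToDto(decimal value)
    {
        long units = decimal.ToInt64(value);                        // truncates toward zero
        int nanos = decimal.ToInt32((value - units) * NanoFactor);  // same sign as value
        return new DecimalDto { Units = units, Nanos = nanos };
    }

    public static decimal ToDecimal(DecimalDto dto) =>
        dto.Units + dto.Nanos / NanoFactor;
}
```

In this sketch, digits beyond the ninth decimal place are cut off and values outside the range of a 64-bit integer cause an OverflowException, so it only suits amounts that stay within those limits.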

Creating the gRPC server project

The gRPC server project can be created as a simple console application, and it should contain a reference to the previously created class library project with the *.proto file.

To start the server, you only need a few lines of code:

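    static void Main(string[] args)
    {
        const int port = 9000;
        const string host = "0.0.0.0"; // listen on all network interfaces

        Grpc.Core.Server server = new Grpc.Core.Server
        {
            // Register the service implementation from the server project
            Services = { DataInputt.TimeService.Api.TimeService.BindService(new TimeService()) },
            // Insecure credentials: the traffic is not encrypted
            Ports = { new Grpc.Core.ServerPort(host, port, Grpc.Core.ServerCredentials.Insecure) }
        };

        server.Start();

        Console.WriteLine($"Server listening on {host}:{port}");
        Console.WriteLine("Press any key to stop...");
        Console.ReadKey();

        // Shut the server down cleanly before the process exits
        server.ShutdownAsync().Wait();
    }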

Example for starting a gRPC server

You only have to define the host and port and assign an implementation to the service generated with the *.proto file in the class library project. The implementation should be located in the gRPC server project.

Implementation of the gRPC service

The gRPC service is implemented by deriving from the base class (here TimeServiceBase) that is generated from the *.proto file in the class library project. The individual service calls can then be implemented by overriding the corresponding methods.

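    public class TimeService : DataInputt.TimeService.Api.TimeService.TimeServiceBase
    {
        // The bodies are placeholders so that the skeleton compiles;
        // replace them with the actual implementation.
        public override Task<UserResponse> CreateUser(UserDto request, ServerCallContext context)
        {
            throw new NotImplementedException();
        }

        public override Task<UserResponse> Login(UserDto request, ServerCallContext context)
        {
            throw new NotImplementedException();
        }

        public override Task<TimeCollection> GetTimes(GetTimesRequest request, ServerCallContext context)
        {
            throw new NotImplementedException();
        }

        public override Task<Empty> AddTime(AddTimeRequest request, ServerCallContext context)
        {
            throw new NotImplementedException();
        }

        public override Task<ProjectCollection> Projects(Empty request, ServerCallContext context)
        {
            throw new NotImplementedException();
        }
    }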

Example of the server implementation of a gRPC service

If the service implementation uses the “old” WCF code, it may be necessary to map the parameters if the data types do not match the “old” DataContract classes.

Furthermore, it is important to know that the service implementation is a singleton: with the Grpc.Core server shown above, the one instance passed to BindService handles every call for the entire runtime of the gRPC service. Contrary to a Web API controller, no new instance of the service implementation is created for each request. Consequently, any state held in the service instance is preserved between the calls and shared by calls that run concurrently. Class variables and resources created in the constructor or injected should therefore preferably be avoided or, where they are needed, made thread-safe, because otherwise their state cannot be relied on between calls.
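The following sketch illustrates the effect without any gRPC dependency. CountingService is a hypothetical stand-in for a service class of which only one instance exists, and the parallel loop simulates concurrent calls to that instance.

```csharp
using System.Threading;
using System.Threading.Tasks;

// Hypothetical stand-in for a singleton service implementation
public class CountingService
{
    private int _loginCount; // shared by all calls to this instance

    // Interlocked makes the increment atomic; a plain _loginCount++
    // could lose updates when calls run concurrently
    public int RegisterLogin() => Interlocked.Increment(ref _loginCount);

    public int LoginCount => Volatile.Read(ref _loginCount);
}

public static class SingletonDemo
{
    // Simulates many concurrent calls hitting the same service instance
    public static int Run(int calls)
    {
        var service = new CountingService();
        Parallel.For(0, calls, _ => service.RegisterLogin());
        return service.LoginCount;
    }
}
```

For anything more complex than a counter, a lock or one of the concurrent collections would be the usual choice; keeping the service stateless avoids the problem altogether.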

Implementation of the gRPC client

You can use the empty implementation of the ServiceContract interface created in the consuming project for the implementation of the gRPC client. First, you have to establish a connection to the gRPC server.

    const int port = 9000;
    string host = Environment.MachineName;

    var channel = new Channel(host, port, ChannelCredentials.Insecure);
    var grpcClient = new TimeService.Api.TimeService.TimeServiceClient(channel);

Example of a client for establishing the connection to the gRPC server

Again, a client class generated with the *.proto file in the class library project is used for this purpose. It provides the calls defined in the *.proto file.

Now the corresponding calls of the gRPC client class have to be added to the empty implementation of the ServiceContract interface. It may be necessary to map the input parameters and return values used by the gRPC service to the previous DataContract classes.
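A possible shape of the completed implementation is sketched below. It assumes that IDataInputService, User and Time are the attribute-free types from the shared class library, and that the generated client and message classes match the *.proto example above. The class name GrpcDataInputService and the mapping helpers are hypothetical.

```csharp
using System.Collections.Generic;
using System.Linq;
using DataInputt.TimeService.Api;            // classes generated from the *.proto file
using Google.Protobuf.WellKnownTypes;
using GrpcTimeService = DataInputt.TimeService.Api.TimeService;

// Hypothetical wrapper: exposes the "old" interface, forwards to gRPC
public class GrpcDataInputService : IDataInputService
{
    private readonly GrpcTimeService.TimeServiceClient _client;

    public GrpcDataInputService(GrpcTimeService.TimeServiceClient client) => _client = client;

    public int CreateUser(User user) => _client.CreateUser(ToDto(user)).Id;

    public int Login(User user) => _client.Login(ToDto(user)).Id;

    public List<Time> GetTimes(int userId) =>
        _client.GetTimes(new GetTimesRequest { UserId = userId })
               .Times.Select(dto => ToTime(dto)).ToList();

    public void AddTime(Time time, int userId) =>
        _client.AddTime(new AddTimeRequest { Time = ToDto(time), UserId = userId });

    public List<string> Projects() => _client.Projects(new Empty()).Projects.ToList();

    // Mapping between the shared classes and the generated message classes
    private static UserDto ToDto(User user) =>
        new UserDto { Name = user.Name, Passwort = user.Passwort };

    private static TimeDto ToDto(Time time) => new TimeDto
    {
        Start = Timestamp.FromDateTime(time.Start.ToUniversalTime()),
        End = Timestamp.FromDateTime(time.End.ToUniversalTime()),
        Project = time.Project,
        Uid = time.uId,
        Id = time.Id
    };

    private static Time ToTime(TimeDto dto) => new Time
    {
        Start = dto.Start.ToDateTime().ToLocalTime(),
        End = dto.End.ToDateTime().ToLocalTime(),
        Project = dto.Project,
        uId = dto.Uid,
        Id = dto.Id
    };
}
```

The generated setters reject null strings, so values such as Name or Project may need a default (for example an empty string) before they are assigned. The blocking calls are used here only because the old interface is synchronous; the generated client also offers asynchronous variants.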

Because of the use and implementation of the “old” WCF Service interface, there is no need to adjust or change anything else in the consuming project.

Bidirectional communication

The concept of bidirectional communication in gRPC is very different from WCF Duplex Services.

With WCF, the server can easily call various methods on the client side via callback interfaces. With gRPC, on the other hand, the client calls a server method, and the server then returns data to the client by way of a stream.

For this purpose, the gRPC server method has to be implemented in such a way that it does not return, which keeps the connection open. The transmission of data to the client can then be triggered by events, for example.

After calling the server method, the client also has to maintain the connection and respond to the receipt of new data.

Consequently, the conversion to gRPC streaming requires some very fundamental, conceptual adjustments.
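To give an impression of what this can look like, here is a sketch of a server-streaming call. It assumes a hypothetical rpc named SubscribeTimes that is not part of the *.proto example above, a hypothetical event source ITimeNotifier, and the System.Threading.Channels package for buffering events. The queue is used because only one write to the response stream may be in progress at a time.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using DataInputt.TimeService.Api;   // generated code, including the assumed rpc
using Grpc.Core;
using GrpcTimeService = DataInputt.TimeService.Api.TimeService;

// Assumed addition to the service definition in the *.proto file:
//   rpc SubscribeTimes (GetTimesRequest) returns (stream TimeDto) {}

// Hypothetical event source that reports newly added times
public interface ITimeNotifier
{
    event Action<TimeDto> TimeAdded;
}

public class StreamingTimeService : GrpcTimeService.TimeServiceBase
{
    private readonly ITimeNotifier _notifier;

    public StreamingTimeService(ITimeNotifier notifier) => _notifier = notifier;

    public override async Task SubscribeTimes(GetTimesRequest request,
        IServerStreamWriter<TimeDto> responseStream, ServerCallContext context)
    {
        // Events are buffered so that writes to the stream happen one at a time
        var queue = System.Threading.Channels.Channel.CreateUnbounded<TimeDto>();
        void OnTimeAdded(TimeDto time)
        {
            if (time.Uid == request.UserId) queue.Writer.TryWrite(time);
        }

        _notifier.TimeAdded += OnTimeAdded;
        try
        {
            // The method only returns when the client cancels or disconnects
            while (await queue.Reader.WaitToReadAsync(context.CancellationToken))
                while (queue.Reader.TryRead(out var time))
                    await responseStream.WriteAsync(time);
        }
        catch (OperationCanceledException)
        {
            // The client has ended the call
        }
        finally
        {
            _notifier.TimeAdded -= OnTimeAdded;
        }
    }
}

public static class StreamingClient
{
    // Keeps the call open and reacts to every item the server sends
    public static async Task ListenAsync(GrpcTimeService.TimeServiceClient client, int userId)
    {
        using (var call = client.SubscribeTimes(new GetTimesRequest { UserId = userId }))
        {
            while (await call.ResponseStream.MoveNext(CancellationToken.None))
                Console.WriteLine($"New time for project {call.ResponseStream.Current.Project}");
        }
    }
}
```

Unlike a WCF callback interface, the stream carries only one message type per call, so several different callbacks would have to be mapped to several streaming calls or to one message with alternative contents.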

Cross-cutting concerns such as authentication, authorization, logging and error handling of the gRPC calls have not been considered. These issues should be checked and adjusted as required in each individual case.

Conclusion

Compared to ASP.NET Core Web API, the conversion from WCF to gRPC requires much more work and more adjustments in the code. Firstly, you have to create a *.proto file. Because of the requirement that each service call must have a return value and that only a single input parameter is permitted, some adjustments of the method signatures are necessary. As some of the generated classes do not use .NET standard types, each server and client method has to be completed with the respective mapping code.

Furthermore, when you use gRPC, it is imperative to know that the lifecycle of the service instance extends over the entire runtime of the gRPC server (singleton).