With the serverless model, application components such as databases or data processing components are provided and operated, automated and demand-based, by the cloud service provider. The cloud user is responsible for configuring these resources, e.g. with their own code or application-specific parameters, and combining them.

The costs incurred depend on the capacities used, and scaling takes place automatically based on the load. The cloud service provider is responsible for the provision, scaling, maintenance, high availability and management of the resources.

Serverless computing is particularly convenient for workloads that are difficult to anticipate or are short-lived, for automation tasks, or for prototypes. Serverless computing is less suitable for resource-intensive, long-term, and predictable tasks because in this case, the costs can be significantly higher than with self-managed execution environments.

Building blocks

Within the framework of a “serverless computing” Advent calendar, we compared the cloud services of AWS and Azure. The windows open under the hashtag #ZEISSDigitalInnovationGoesServerless.

| Category | AWS | Azure |

|---|---|---|

| COMPUTE Serverless Function | AWS Lambda | Azure Functions |

| COMPUTE Serverless Containers | AWS Fargate Amazon ECS/EKS | Azure Container Instances / AKS |

| INTEGRATION API Management | Amazon API Gateway | Azure API Management |

| INTEGRATION Pub-/Sub-Messaging | Amazon SNS | Azure Event Grid |

| INTEGRATION Message Queues | Amazon SQS | Azure Service Bus |

| INTEGRATION Workflow Engine | AWS Step Functions | Azure Logic App |

| INTEGRATION GraphQL API | AWS AppSync | Azure Functions mit Apollo Server |

| STORAGE Object Storage | Amazon S3 | Azure Storage Account |

| DATA NoSQL-Datenbank | Amazon DynamoDB | Azure Table Storage |

| DATA Storage Query Service | Amazon Aurora Serverless | Azure SQL Database Serverless |

| SECURITY Identity Provider | Amazon Cognito | Azure Active Directory B2C |

| SECURITY Key Management | AWS KMS | Azure Key Vault |

| SECURITY Web Application Firewall | AWS WAF | Azure Web Application Firewall |

| NETWORK Content Delivery Network | Amazon CloudFront | Azure CDN |

| NETWORK Load Balancer | Application Load Balancer | Azure Application Gateway |

| NETWORK Domain Name Service | Amazon Route 53 | Azure DNS |

| ANALYTICS Data Stream | Amazon Kinesis | Analytics |

| ANALYTICS ETL Service | AWS Glue | Azure Data Factory |

| ANALYTICS Storage Query Service | Amazon Athena | Azure Data Lake Analytics |

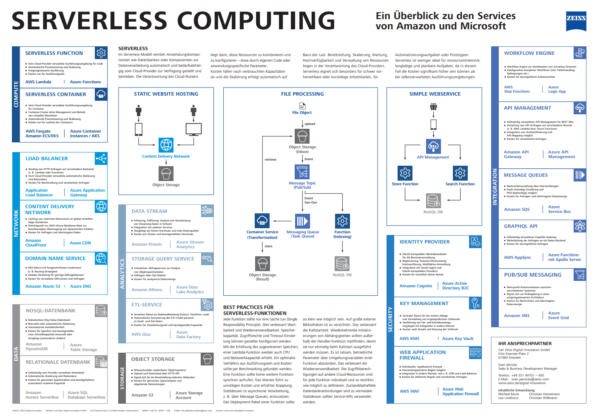

We compiled an overview of the above-mentioned services and their characteristics, including some exemplary reference architectures on a poster (english version follows). This overview offers a simple introduction to the topic of serverless architecture.

We will gladly send you the poster in original size (1000 x 700 mm). Simply send us an e-mail with your address to info.digitalinnovation@zeiss.com. Please note our privacy policy.

Best practices for serverless functions

Each function should be responsible for a single task (single responsibility principle): this improves maintainability and reusability. Storage capacity, access rights and timeout settings can be configured more specifically.

As the allotted storage space of a Lambda function is increased, the capacity of the CPU and the network increases as well. The optimal ratio between execution time and costs should be determined by way of benchmarking.

A function should not call up another synchronous function. The wait causes unnecessary costs and increased coupling. Instead, you should use asynchronous processing, e.g. with message queues.

The deployment package of each function should be as small as possible. Large external libraries are to be avoided. This improves the cold start time. Recurring initializations of dependencies should be executed outside of the handler function so that they have to be executed only once upon the cold start. It is advisable to define operative parameters by means of a function’s environment variables. This improves the reusability.

The rights to access other cloud resources should be defined individually for each function and as restrictively as possible. Stateful database connections are to be avoided. Instead, you should use service APIs.