In today’s digital world, the ability to manage data efficiently and effectively is critical to the success of any industrial data platform. A key part of this process is data ingestion. But what exactly does data ingestion mean? Simply put, it is the process of collecting data from multiple sources, transferring it to a central data platform, and storing it there for further processing and analysis. Data ingestion plays a particularly important role in the manufacturing industry, where large amounts of data from different sources often have to be combined. Such data may include, for example, information on production processes, machine states, supply chains and quality controls. By analyzing this data, companies gain valuable insights into production bottlenecks, machine performance and product quality, which they can use to make production processes more efficient and to reduce costs.

An industrial data platform is made up of several components, among which data storage plays a decisive role. The architecture of such a platform should be designed to enable efficient ingestion, storage and processing of data, which is essential for the platform’s performance and scalability.

The manufacturing industry faces specific challenges when it comes to data acquisition. On the one hand, data volumes are often very high, since machines and sensors continuously generate data. On the other hand, data comes from different sources and in different formats, making integration difficult. In addition, there are real-time requirements, since many manufacturing applications require immediate processing of data to optimize production processes or avoid downtime.

The goal of this article is to provide a comprehensive overview of data ingestion in industrial data platforms. In the first part, we explain different methods and techniques of data acquisition and discuss concepts of data storage, pointing out the advantages and disadvantages of the various approaches. In the second part, we discuss common challenges and present possible solutions. We place particular emphasis on explaining the concepts in an easy-to-understand manner and on illustrating them with concrete application examples.

Types of data sources in the manufacturing industry

In the manufacturing industry, there are a variety of data sources that are used for optimizing and monitoring production processes. The most important are:

- Sensor and machine data: Machines and production plants are often equipped with sensors that continuously collect data on temperature, pressure, vibrations and other operating parameters. In addition, the machines themselves generate important data, such as operating states and error messages. Both sensor data and machine-specific data are crucial for predictive maintenance and for optimizing machine performance. Since the sensor data is often not directly accessible, it is typically read at the machine level, for example via a programmable logic controller (PLC).

- Supervisory Control and Data Acquisition (SCADA) systems: SCADA systems monitor and control industrial processes at a higher level. They collect data from various sensors and control units and enable remote monitoring and control of production plants.

- Manufacturing Execution Systems (MES): MES monitor and control production processes in real time. They collect data on production orders, machine utilization and production quality.

- Enterprise Resource Planning (ERP) systems: ERP systems manage business processes such as procurement, production, sales and human resources. They provide valuable data on material flows, production plans and inventory management.

- Quality control systems: These systems collect data on the quality of the goods produced. They help to identify quality problems at an early stage and to take measures to improve product quality.

- External data sources: Environmental and weather data can also play an important role, especially in sectors heavily influenced by external conditions. This data can be used to adapt production processes to changing environmental conditions.

Data formats

Data sources used in the manufacturing industry provide data in various formats. Some of the most common formats include:

- CSV (Comma-Separated Values): A simple text format commonly used for tabular data. CSV is easy to create and can be processed with almost any tool, from spreadsheet applications such as Microsoft Excel to scripting languages.

- XML (Extensible Markup Language): A data format widely used in industrial machine integration, often supplemented by an XML Schema Definition (XSD) that precisely defines the structure of the data and allows it to be validated. Although newer formats such as JSON are gaining in importance, XML remains an important part of many industrial applications because of its robustness and compatibility with existing infrastructure.

- JSON (JavaScript Object Notation): A widely used format for structured data that can be easily read by machines and humans.

- OPC UA (Open Platform Communications Unified Architecture): A platform-independent communication protocol designed specifically for industrial automation. Standardized semantic data models in the form of companion specifications are particularly useful here.

- Images: In the manufacturing industry, image data is often used, for example from cameras for quality control or for monitoring production processes. This image data can provide valuable information that helps optimize production processes.

- Documents: These include formats such as PDF, which often contain technical specifications, manuals or reports. PDF documents can also embed XML data containing structured information to facilitate data analysis.

- Proprietary formats: Many machines and systems use vendor-specific data formats, which are often tailored specifically to the requirements of the respective application.
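Because the same information often arrives in several of these formats, ingestion code usually maps each one onto a common record structure. The following is a minimal sketch using only the Python standard library; the payloads and the field names `machine_id`, `timestamp` and `temperature` are hypothetical and stand in for whatever a real source delivers.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical payloads: the same temperature reading delivered as CSV, JSON and XML.
CSV_PAYLOAD = "machine_id,timestamp,temperature\nM-01,2024-05-06T08:00:00Z,71.5\n"
JSON_PAYLOAD = '{"machine_id": "M-01", "timestamp": "2024-05-06T08:00:00Z", "temperature": 71.5}'
XML_PAYLOAD = (
    '<reading machine_id="M-01" timestamp="2024-05-06T08:00:00Z">'
    "<temperature>71.5</temperature></reading>"
)


def from_csv(text):
    """CSV carries no type information, so numeric fields are converted explicitly."""
    return [
        {"machine_id": row["machine_id"], "timestamp": row["timestamp"],
         "temperature": float(row["temperature"])}
        for row in csv.DictReader(io.StringIO(text))
    ]


def from_json(text):
    """JSON distinguishes numbers from strings, but we still normalize the type."""
    obj = json.loads(text)
    return [{"machine_id": obj["machine_id"], "timestamp": obj["timestamp"],
             "temperature": float(obj["temperature"])}]


def from_xml(text):
    """Read the attributes and the child element of a single <reading> element."""
    root = ET.fromstring(text)
    return [{"machine_id": root.get("machine_id"), "timestamp": root.get("timestamp"),
             "temperature": float(root.findtext("temperature"))}]


# All three sources end up as identical records in one common structure.
records = from_csv(CSV_PAYLOAD) + from_json(JSON_PAYLOAD) + from_xml(XML_PAYLOAD)
```

In a real platform, OPC UA, image and proprietary sources would need their own adapters, typically based on a client library or a vendor SDK rather than the standard library.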

Data volume and speed

In the manufacturing industry, large amounts of data are generated at different frequencies and speeds. Typically, sensor data can be collected in real time or at very short intervals (milliseconds to seconds), resulting in a high data volume. ERP and MES systems normally provide data at longer intervals (minutes to hours), while the frequency of data from quality control systems varies depending on the production cycle.

The speed at which this data is transmitted to the central data platform depends on the type of data source and the specific requirements of the application. Real-time data often needs to be processed immediately, while other data can be collected and transferred at regular intervals.
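A quick back-of-the-envelope calculation shows why these frequencies matter for sizing the platform. The sketch below multiplies the number of sources, the samples per day and the record size; all figures (500 sensors every 10 ms at 32 bytes per sample, one 2 MB ERP export every 15 minutes) are hypothetical assumptions, not measurements.

```python
MS_PER_DAY = 24 * 60 * 60 * 1000  # 86,400,000 ms


def samples_per_day(interval_ms):
    """Number of samples a single source produces per day at a fixed interval."""
    return MS_PER_DAY // interval_ms


def daily_volume_bytes(sources, interval_ms, bytes_per_record):
    """Raw (uncompressed) daily data volume for identical sources."""
    return sources * samples_per_day(interval_ms) * bytes_per_record


# Hypothetical: 500 sensors sampled every 10 ms, 32 bytes per sample
sensor_bytes = daily_volume_bytes(500, 10, 32)
# Hypothetical: one 2 MB ERP export every 15 minutes
erp_bytes = daily_volume_bytes(1, 15 * 60 * 1000, 2_000_000)

print(f"sensors: {sensor_bytes / 1e9:.1f} GB/day")  # sensors: 138.2 GB/day
print(f"ERP: {erp_bytes / 1e9:.3f} GB/day")         # ERP: 0.192 GB/day
```

Even with these modest assumptions, the high-frequency sensor data outweighs the periodic ERP export by roughly three orders of magnitude, which is why it usually drives the choice of transport and storage.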

For more information on typical use cases by latency, see the white paper Industrial Data Platform.

Data access options

There are several technical ways to access data sources in the manufacturing industry:

- Log files: In a production plant, machines log error events and operating times in log files. These log files can be read regularly to monitor the condition of the machines and analyze errors.

- File access: A manufacturing company can store sensor data from production lines in CSV files on a central network share, where data analysts can retrieve and analyze them to identify production patterns. Other applications of file access include image data and quality assurance inspection reports.

- SQL (Structured Query Language): A production database can contain information about inventories, production schedules and supply chains. Engineers and data analysts can use SQL queries to retrieve data selectively, for example to generate specific reports.

- CDC (Change Data Capture): This technique captures changes in databases as they occur and transmits them to the central data platform. It is of particular interest for applications that must react to changes very quickly (in near real time). This allows process parameters to be monitored closely and countermeasures to be initiated promptly when deviations are detected.

- API (Application Programming Interface): Many systems provide APIs that can be used to retrieve data programmatically. One area of application is the integration of a production planning system with an ERP system in order to synchronize production plans and material requirements automatically.

- Messaging: In a networked manufacturing environment, an MQTT-based system can be used to send sensor data from different machines to a central control unit. This enables real-time monitoring and control of production processes, which is particularly important in Industry 4.0 applications. Apache Kafka, a platform for storing and processing data streams, can be used to process and analyze large amounts of data from IoT devices; the results can in turn be used to optimize production processes.

- Best practices: To minimize the load on the primary data source, it can be useful to read from read replicas. These copies of the database serve read access without affecting the performance of the main database.
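Reading log files regularly usually means parsing each line into structured fields before the data is forwarded to the platform. The sketch below assumes a hypothetical line format `<date> <time> <LEVEL> <machine> <code> <message>`; real machine logs differ by vendor, so the regular expression is an illustration only.

```python
import re
from collections import Counter

# Hypothetical line format: "<date> <time> <LEVEL> <machine> <code> <message>"
LOG_LINE = re.compile(
    r"^(?P<ts>\S+ \S+) (?P<level>[A-Z]+) (?P<machine>\S+) (?P<code>\S+) (?P<message>.*)$"
)


def parse_log(lines):
    """Yield one dict per well-formed line; lines that do not match are skipped."""
    for line in lines:
        match = LOG_LINE.match(line.rstrip("\n"))
        if match:
            yield match.groupdict()


sample = [
    "2024-05-06 08:00:12 INFO M-01 S01 Cycle started",
    "2024-05-06 08:03:40 ERROR M-01 E42 Spindle temperature too high",
    "2024-05-06 08:04:02 ERROR M-02 E17 Coolant pressure low",
    "corrupted line",
]

events = list(parse_log(sample))
# Count error events per machine, e.g. as input for error analysis.
errors_per_machine = Counter(e["machine"] for e in events if e["level"] == "ERROR")
```

For regular reads, a collector would additionally remember the file offset it last processed so that only new lines are parsed on the next run; that bookkeeping is omitted here.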
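A simple way to retrieve only new or changed rows via SQL is a watermark query on a timestamp column. Note that this is a query-based approximation, not log-based CDC: log-based CDC tools read the database’s transaction log and also capture deletes, which this polling approach misses. The sketch uses Python’s built-in `sqlite3` with a hypothetical `production_orders` table and `updated_at` column; in practice the connection could point to a read replica.

```python
import sqlite3


def extract_changes(conn, last_watermark):
    """Return rows changed after last_watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, status, updated_at FROM production_orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark to the newest change seen; keep it if nothing changed.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE production_orders (id INTEGER PRIMARY KEY, status TEXT, updated_at TEXT)"
)
conn.executemany(
    "INSERT INTO production_orders VALUES (?, ?, ?)",
    [(1, "planned", "2024-05-06T08:00:00"), (2, "running", "2024-05-06T08:05:00")],
)
conn.commit()

# First run: everything is new.
batch1, wm = extract_changes(conn, "1970-01-01T00:00:00")

# An order is completed; the next run only picks up that change.
conn.execute(
    "UPDATE production_orders SET status = 'done', updated_at = '2024-05-06T09:00:00' "
    "WHERE id = 1"
)
conn.commit()
batch2, wm = extract_changes(conn, wm)
```

ISO 8601 timestamps compare correctly as strings, which keeps the query simple. With a strict `>` comparison, rows committed later but carrying a timestamp equal to the watermark would be missed, one of the reasons log-based CDC is preferred when changes must not be lost.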

By combining these different data sources, formats, and access options, an industrial data platform can provide valuable insights into production processes and help optimize the overall manufacturing process.

Data storage in the platform: The concept of a data lakehouse

In today’s data architecture, the data lakehouse concept has emerged as a promising solution that combines the advantages of data lakes and data warehouses. A data lakehouse allows large amounts of structured and unstructured data to be stored and processed in a unified system, making it easier for companies to gain valuable insights from their data.

Data storage formats

A key feature of a data lakehouse is the use of efficient storage formats that enable quick retrieval and analysis of data. Some of the most common formats include:

- Parquet: A column-based storage format optimized for storing large amounts of data. Parquet enables efficient data compression and encoding, reducing storage costs and increasing query speed.

- Delta Lake: An open-source storage layer built on the Apache Parquet format that supports ACID transactions. Delta Lake lets you store data in a data lake while benefiting from data warehouse capabilities such as structured queries and data integrity.

- Apache Iceberg: An open-source table format that provides a flexible and powerful solution for managing large amounts of data in data lakehouses. Iceberg supports complex queries and simplifies the management of data versions through table snapshots.
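Part of Parquet’s efficiency comes from storing data column by column and encoding each column separately, for example with dictionary and run-length encoding. The sketch below illustrates these ideas in plain Python on hypothetical machine data; it is a conceptual illustration, not the Parquet file format or a Parquet library.

```python
def to_columns(rows):
    """Transpose row-oriented records into one list per column."""
    return {key: [row[key] for row in rows] for key in rows[0]}


def dictionary_encode(values):
    """Replace each value by an index into a list of its distinct values."""
    dictionary, codes, index = [], [], {}
    for value in values:
        if value not in index:
            index[value] = len(dictionary)
            dictionary.append(value)
        codes.append(index[value])
    return dictionary, codes


def run_length_encode(values):
    """Collapse consecutive repeats into [value, count] pairs."""
    runs = []
    for value in values:
        if runs and runs[-1][0] == value:
            runs[-1][1] += 1
        else:
            runs.append([value, 1])
    return runs


# Hypothetical rows as they might arrive from a production line.
rows = [
    {"machine_id": "M-01", "state": "RUNNING", "temperature": 71.5},
    {"machine_id": "M-01", "state": "RUNNING", "temperature": 71.7},
    {"machine_id": "M-01", "state": "RUNNING", "temperature": 71.6},
    {"machine_id": "M-02", "state": "STOPPED", "temperature": 40.2},
]

columns = to_columns(rows)
# A query on temperature only needs this one column (column pruning).
temperatures = columns["temperature"]
# Low-cardinality columns shrink considerably once dictionary- and run-length-encoded.
dictionary, codes = dictionary_encode(columns["machine_id"])
runs = run_length_encode(codes)
```

The repetitive columns (`machine_id`, `state`) collapse into a few runs, while the fluctuating `temperature` values do not; real Parquet writers choose encodings per column for exactly this reason.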
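Formats such as Delta Lake and Apache Iceberg add transactions and versioning on top of immutable data files by keeping metadata about which files belong to which table version. The sketch below shows the underlying idea with a deliberately simplified, hypothetical log class (`ToyTableLog`): one JSON file per committed version, an atomic "create only if absent" commit that rejects concurrent writers, and time travel by replaying the log up to an older version. It does not reproduce the actual Delta Lake or Iceberg metadata formats.

```python
import json
import os
import tempfile


class ToyTableLog:
    """Hypothetical, simplified transaction log: one JSON file per table version."""

    def __init__(self, root):
        self.log_dir = os.path.join(root, "_log")
        os.makedirs(self.log_dir, exist_ok=True)

    def _path(self, version):
        return os.path.join(self.log_dir, f"{version:020d}.json")

    def latest_version(self):
        versions = [int(name.split(".")[0]) for name in os.listdir(self.log_dir)]
        return max(versions, default=-1)

    def commit(self, expected_version, add=(), remove=()):
        """Write version expected_version + 1; raise FileExistsError if it already exists."""
        new_version = expected_version + 1
        # O_EXCL makes creation atomic: only one writer can create a given version file.
        fd = os.open(self._path(new_version), os.O_WRONLY | os.O_CREAT | os.O_EXCL)
        with os.fdopen(fd, "w") as f:
            json.dump({"add": list(add), "remove": list(remove)}, f)
        return new_version

    def files_at(self, version):
        """Replay the log up to `version` to get the data files of that snapshot."""
        files = set()
        for v in range(version + 1):
            with open(self._path(v)) as f:
                entry = json.load(f)
            files |= set(entry["add"])
            files -= set(entry["remove"])
        return files


with tempfile.TemporaryDirectory() as root:
    log = ToyTableLog(root)
    v0 = log.commit(-1, add=["part-0.parquet"])
    v1 = log.commit(v0, add=["part-1.parquet"])
    # A rewrite replaces part-0 logically; the old file stays available for time travel.
    v2 = log.commit(v1, add=["part-2.parquet"], remove=["part-0.parquet"])

    conflict = False
    try:
        log.commit(v1, add=["stale.parquet"])  # a second writer still based on v1
    except FileExistsError:
        conflict = True

    current = log.files_at(log.latest_version())
    snapshot_v1 = log.files_at(v1)  # "time travel" to an older version
```

Real implementations must additionally make the commit visible atomically to readers (here a reader could briefly see an empty file) and rely on storage that supports a conditional "put if absent"; both are simplified away in this sketch.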

Comparing Data Warehouse and Data Lakehouse

Data warehouses are designed to store structured data in fixed schemas and provide powerful query capabilities for business intelligence and reporting. They are ideal for organizations that need consistent, structured data to produce historical analyses and reports. However, they can be expensive and less flexible when it comes to storing and processing unstructured data.

Data lakehouses, on the other hand, utilize cost-effective object storage services such as Amazon S3 or Azure Blob Storage. This feature is borrowed from data lakes, but the aforementioned storage formats also allow structured data to be stored in them. Such storage solutions offer virtually unlimited scalability and flexibility, making it possible to store large amounts of data without having to worry about infrastructure. Using object storage not only reduces data storage costs but also enables the integration of data from various sources and its processing with modern analytics tools, which is particularly beneficial for data-intensive applications such as machine learning and real-time analytics. Data lakehouses add a layer of structure and performance optimization on top of the data lake, creating a hybrid architecture that combines the strengths of both data lakes and data warehouses.

Unlike in traditional data warehouses, storage and compute can be scaled independently of each other in a data lakehouse. Because compute capacity can be provisioned on demand and at short notice, the benefits of the cloud can be used effectively: very large amounts of data can be processed in a shorter time. Conversely, releasing these resources when demand drops saves costs.

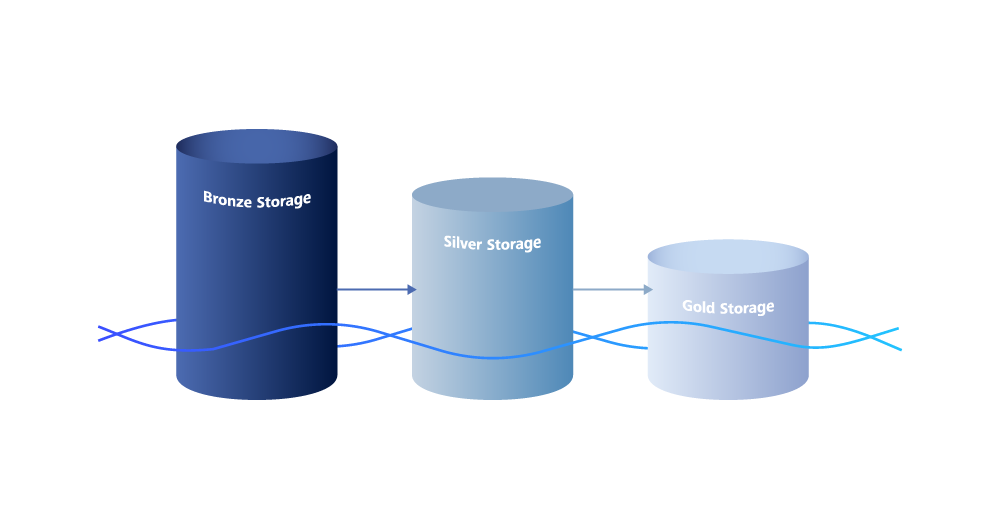

Bronze stage of the medallion architecture

The medallion architecture is an approach to organizing the data in a data platform in multiple layers that gradually improve its quality and usability. These layers are the bronze layer for raw data; the silver layer for cleansed data, in which erroneous, incomplete or inconsistent records have been corrected or removed; and the gold layer for enriched data that has been further refined by adding information or by aggregation. This layering enables structured and efficient data processing.
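The flow through the three layers can be illustrated with a few in-memory records. In this minimal sketch, the hypothetical silver step casts types, drops incomplete or unparsable records and removes duplicate deliveries (same machine and timestamp); the gold step aggregates the average temperature per machine. Real pipelines would run such steps on the lakehouse with an engine such as Spark; the field names here are assumptions.

```python
from statistics import mean

# Bronze: raw records exactly as received (hypothetical payloads, values still strings).
bronze = [
    {"machine_id": "M-01", "ts": "08:00", "temperature": "71.5"},
    {"machine_id": "M-01", "ts": "08:00", "temperature": "71.5"},  # duplicate delivery
    {"machine_id": "M-01", "ts": "08:01", "temperature": "n/a"},   # erroneous value
    {"machine_id": "M-02", "ts": "08:00", "temperature": "40.0"},
    {"machine_id": None, "ts": "08:00", "temperature": "55.0"},    # incomplete record
    {"machine_id": "M-01", "ts": "08:02", "temperature": "72.5"},
]


def to_silver(records):
    """Cast types, drop incomplete or unparsable records and remove duplicate deliveries."""
    seen, cleaned = set(), []
    for r in records:
        if not r.get("machine_id"):
            continue  # incomplete
        try:
            temperature = float(r["temperature"])
        except (TypeError, ValueError):
            continue  # erroneous value
        key = (r["machine_id"], r["ts"])
        if key in seen:
            continue  # duplicate of an already accepted reading
        seen.add(key)
        cleaned.append({"machine_id": r["machine_id"], "ts": r["ts"],
                        "temperature": temperature})
    return cleaned


def to_gold(records):
    """Aggregate: average temperature per machine."""
    by_machine = {}
    for r in records:
        by_machine.setdefault(r["machine_id"], []).append(r["temperature"])
    return {machine: mean(values) for machine, values in by_machine.items()}


silver = to_silver(bronze)
gold = to_gold(silver)
```

The bronze list itself is never modified, so the silver and gold results can be recomputed at any time, for example after a cleansing rule changes.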

An important aspect of data storage in a data lakehouse is the bronze stage of the medallion architecture. In this stage, data from the different sources (see Types of data sources) is ingested for the first time. The data is stored in its raw format, meaning that it has not yet been cleansed or transformed. The bronze stage serves as a central repository for all incoming data and enables companies to preserve a complete history of their data.

The bronze stage is crucial for data ingestion, as it forms the basis for the subsequent stages (silver and gold), in which the data is further processed, enriched and optimized for analysis. Because the data is kept in the bronze stage, companies can access the original data at any time. This is particularly important for audits, as it ensures transparency and traceability. For data analysis, access to raw data provides the flexibility to test new analysis methods or to validate existing analyses against the original data.
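Ingestion into the bronze stage can be kept very simple: store each payload byte for byte, never overwrite existing objects, and record metadata such as source, arrival time and a checksum to support audits. The sketch below uses a local directory as a stand-in for object storage; the function `ingest_raw`, the Hive-style path layout `source=…/ingest_date=…` and the metadata fields are hypothetical choices, not a prescribed standard.

```python
import hashlib
import json
import os
import tempfile
from datetime import datetime, timezone


def ingest_raw(root, source, payload, received_at):
    """Store payload unchanged in the bronze zone and write a metadata sidecar file."""
    digest = hashlib.sha256(payload).hexdigest()
    folder = os.path.join(root, "bronze", f"source={source}",
                          f"ingest_date={received_at:%Y-%m-%d}")
    os.makedirs(folder, exist_ok=True)
    data_path = os.path.join(folder, f"{received_at:%H%M%S%f}_{digest[:12]}.raw")
    # Append-only: mode "x" fails instead of silently overwriting an existing object.
    with open(data_path, "xb") as f:
        f.write(payload)
    with open(data_path + ".meta.json", "x") as f:
        json.dump({"source": source, "received_at": received_at.isoformat(),
                   "sha256": digest, "size_bytes": len(payload)}, f)
    return data_path


with tempfile.TemporaryDirectory() as root:
    received = datetime(2024, 5, 6, 8, 0, tzinfo=timezone.utc)
    path = ingest_raw(root, "mes", b'{"order": 4711, "status": "done"}', received)
    with open(path, "rb") as f:
        stored = f.read()
    with open(path + ".meta.json") as f:
        meta = json.load(f)
    rel = os.path.relpath(path, root)
```

Partitioning the raw data by source and ingestion date keeps later reprocessing cheap, because the silver stage can re-read exactly the affected partitions, and the checksum allows auditors to verify that the raw data was not altered.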

Conclusion

Data ingestion is a key component of industrial data platforms that enables data from multiple sources to be collected efficiently and stored for analysis. This is particularly important in the manufacturing industry, where large amounts of data often have to be processed in real time to optimize production processes. Storing data in a data lakehouse, using efficient formats such as Parquet, Delta Lake or Apache Iceberg on low-cost cloud object storage, offers numerous benefits. By implementing a medallion architecture with a clear bronze stage for data ingestion, companies can build a robust and flexible data infrastructure that helps them gain valuable insights from their data and optimize their production processes.

Crucially, the implementation of a data platform must align with a company’s needs. After all, the value of an industrial data platform lies not in the data or the platform itself, but in the use cases built on the platform and its data. These use cases are the best indication of which steps to start with when building such a platform. It is therefore advisable to prioritize them, e.g. based on a rapid return on investment (ROI) or quick wins. Not all elements of the data platform need to be implemented right from the start; instead, the infrastructure can grow in a sensible order.

This post was written by:

Christian Heinemann

Christian Heinemann is a graduate computer scientist and works as a solution architect at ZEISS Digital Innovation in Leipzig. His work focuses on the areas of distributed systems, cloud technologies and digitalization in manufacturing. Christian has more than 20 years of project experience in software development. He works together with various ZEISS units and external customers to develop and implement innovative solutions.